Features/Livebackup

Revision as of 16:55, 11 May 2015

Note: This feature proposal is archived and the code was not merged into QEMU. The Incremental Backup feature was merged into QEMU instead for the 2.4 development window.

Livebackup - A Complete Solution for making Full and Incremental Disk Backups of Running VMs

Livebackup provides the ability for an administrator or a management server to use a livebackup_client program to connect to the qemu process and copy the disk blocks that were modified since the last backup was taken.

Contact

Jagane Sundar (jagane at sundar dot org)

Overview

The goal of this project is to add the ability to do full and incremental disk backups of a running VM. These backups will be transferred over a TCP connection to a backup server, and the virtual disk images will be reconstituted there. This project does not transfer the memory contents of the running VM, or the device states of emulated devices, i.e. livebackup is not VM suspend.

qemu is enhanced to maintain an in-memory dirty blocks bitmap for each virtual disk. A rudimentary network protocol for transferring the dirty blocks from qemu to another machine, possibly a dedicated backup server, is included. A new command line utility livebackup_client is developed. livebackup_client runs on the backup server, connects to qemu, transfers the dirty blocks, and updates or creates the backup version of all virtual disk images.
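To make the dirty blocks bitmap concrete, here is a minimal Python sketch of the idea (the real code lives in qemu and is written in C). It assumes a fixed tracking granularity of 512 bytes, which the proposal does not specify, and the class and method names are hypothetical. Each guest write sets one bit for every block it touches.

```python
BLOCK_SIZE = 512  # hypothetical tracking granularity; the proposal does not state one


class DirtyBitmap:
    """One bit per block of a virtual disk; a set bit means "modified since the last backup"."""

    def __init__(self, disk_size, block_size=BLOCK_SIZE):
        self.block_size = block_size
        self.nblocks = (disk_size + block_size - 1) // block_size
        self.bits = bytearray((self.nblocks + 7) // 8)

    def mark_write(self, offset, length):
        """Record a guest write of `length` bytes starting at byte `offset`."""
        if length <= 0:
            return
        first = offset // self.block_size
        last = (offset + length - 1) // self.block_size
        for block in range(first, last + 1):
            self.bits[block // 8] |= 1 << (block % 8)

    def is_dirty(self, block):
        return bool(self.bits[block // 8] & (1 << (block % 8)))

    def dirty_blocks(self):
        """Block numbers an incremental backup would have to transfer."""
        return [b for b in range(self.nblocks) if self.is_dirty(b)]


if __name__ == "__main__":
    bitmap = DirtyBitmap(disk_size=4096)        # 8 blocks of 512 bytes
    bitmap.mark_write(offset=1000, length=100)  # bytes 1000..1099 span blocks 1 and 2
    print(bitmap.dirty_blocks())                # [1, 2]
```

A bitmap costs one bit per block, so even a large disk needs only a small amount of memory to track, which is what makes keeping it in memory during normal operation cheap.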

Livebackup introduces the notion of a livebackup snapshot, which qemu creates when the livebackup_client connects and destroys once all the dirty blocks have been transferred. While this snapshot is active, a VM write that would overwrite a dirty block requires the original block to be read and saved to a COW file first.

Livebackup is fully self-sufficient and works with all types of disks and all disk image formats.

Source Code Repositories

Currently, Livebackup is available as an enhancement to both the qemu and qemu-kvm projects. The goal is to get Livebackup accepted by the qemu project, and then flow through to the qemu-kvm project.

Clone git://github.com/jagane/qemu-livebackup.git for a copy of the qemu source tree with the Livebackup enhancements.

Clone git://github.com/jagane/qemu-kvm-livebackup.git for a copy of the qemu-kvm source tree with the Livebackup enhancements.

Use Cases

Today, IaaS cloud platforms such as EC2 offer two types of virtual disks for VM instances:

- ephemeral virtual disks that are lost if there is a hardware failure

- EBS storage volumes, which are costly (EBS stands for Elastic Block Store, Amazon EC2's block storage with availability guarantees)

I think that an efficient disk backup mechanism will enable a third type of virtual disk: one that is backed up, perhaps every hour or so. A cloud operator using KVM virtual machines could then offer three types of VMs:

- an ephemeral VM that is lost if a hardware failure happens

- a backed up VM that can be restored from the last hourly backup

- a highly available VM running on shared storage.

High Level Design

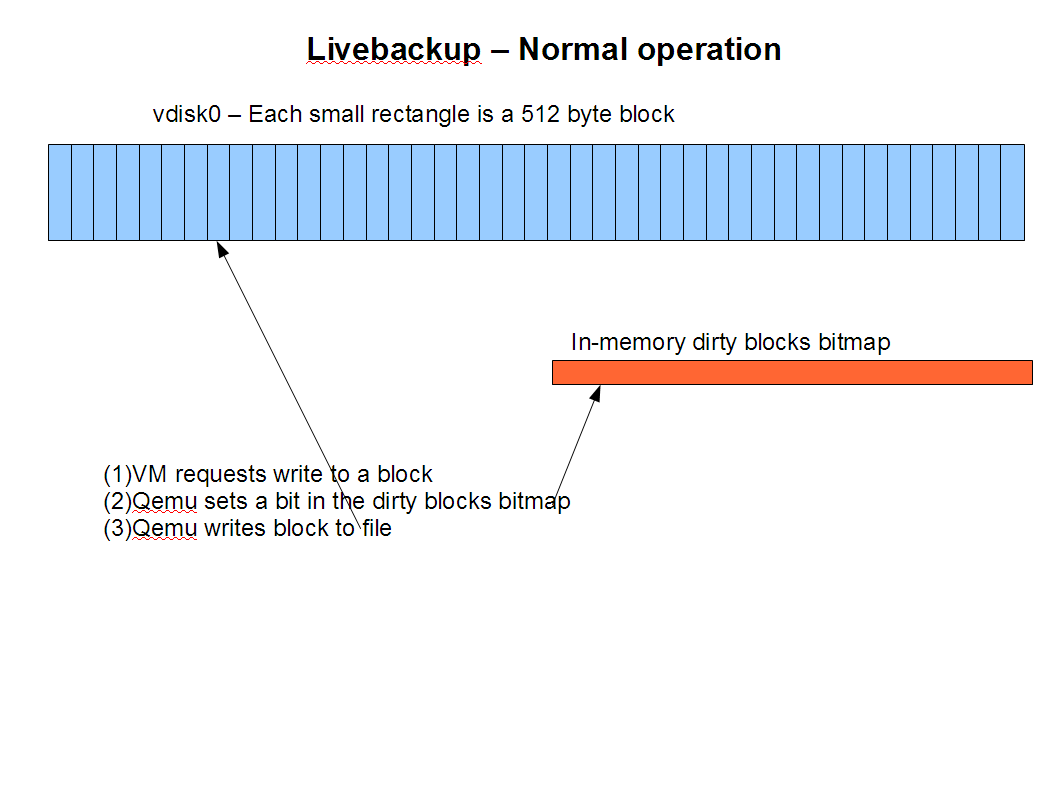

- During normal operation, livebackup code in qemu keeps an in-memory bitmap of blocks modified.

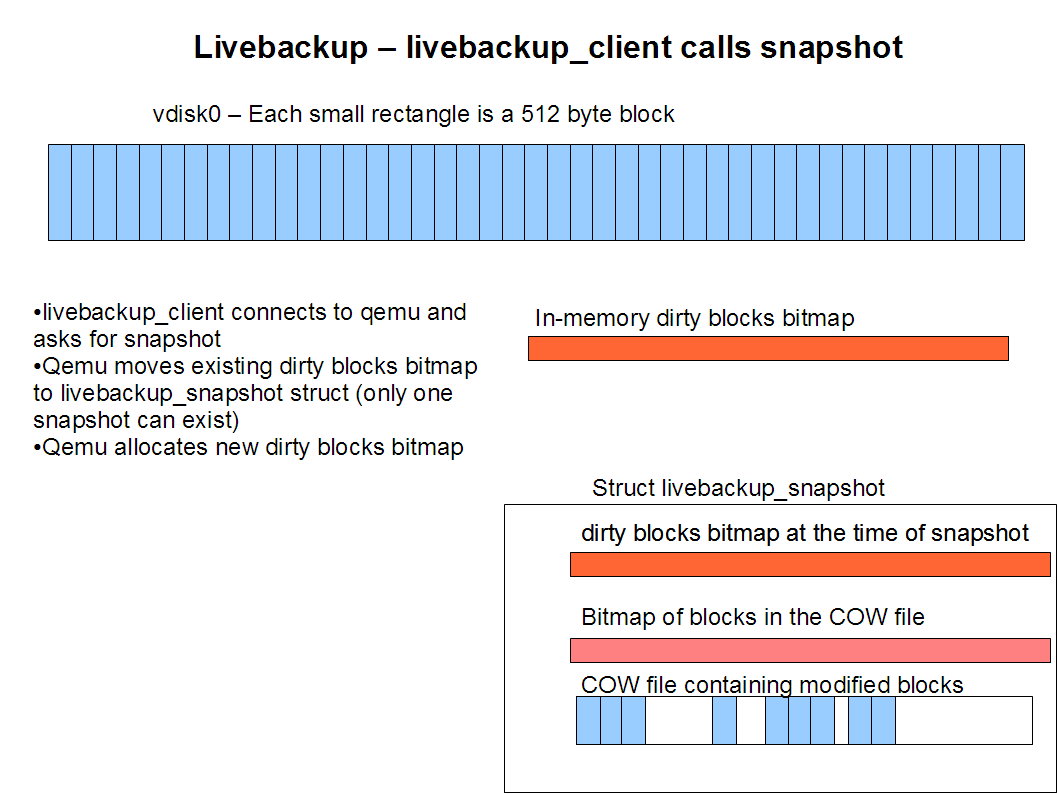

- When a backup client connects to the livebackup thread in qemu, it creates a snapshot

- A snapshot, in livebackup terms, is merely the dirty blocks bitmap of all virtual drives configured for the VM

- After the snapshot is created, livebackup_client starts to transfer the modified blocks over to the backup server

- The snapshot is maintained during the time that this transfer happens, after which it is destroyed.

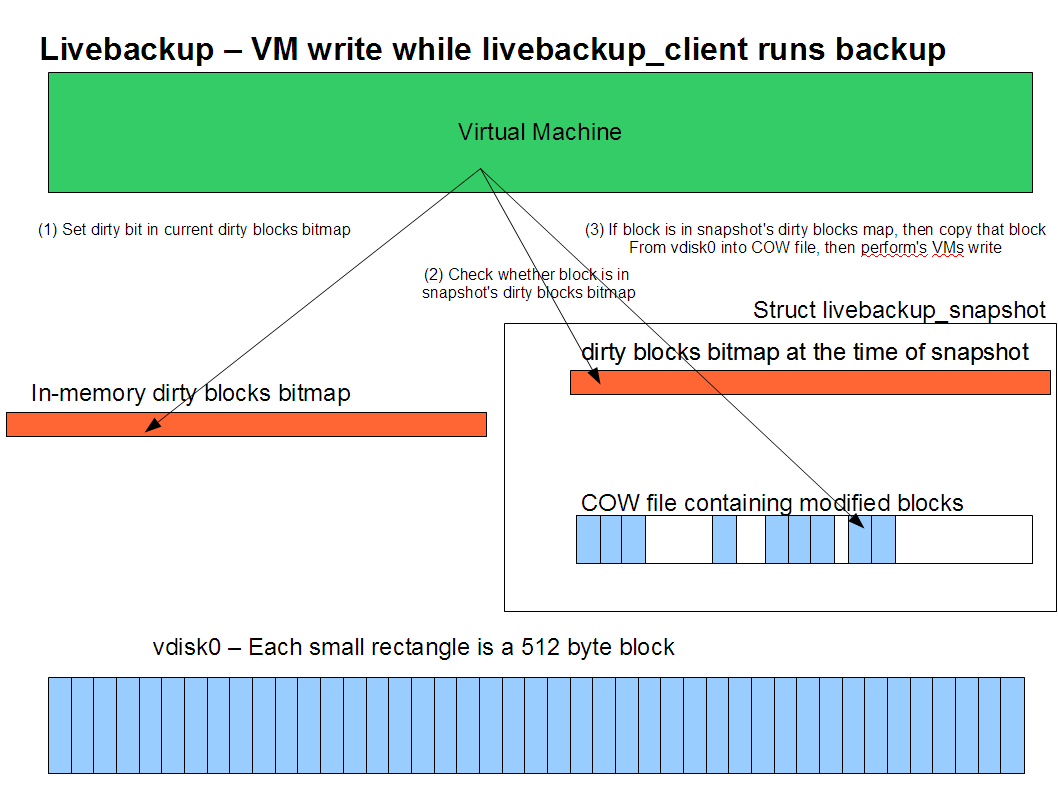

- Note that when this snapshot is active, any write by the VM to the underlying virtual disk has to be intercepted, and if the write overlaps a block that is marked as dirty in the snapshot's livebackup dirty blocks bitmap, then the blocks from the underlying virtual disk have to be copied over to a COW file in the snapshot, before being overwritten.
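The write interception described above can be sketched as a toy in-memory model. This is an illustration under stated assumptions, not the qemu implementation: the disk is a `bytearray`, the COW file is a dictionary keyed by block number, the block size is a tiny hypothetical 4 bytes, and `LiveDisk`, `create_snapshot` and `destroy_snapshot` are hypothetical names. One detail the sketch makes explicit is that a block's original contents are saved only on the first overwrite, because a later overwrite would otherwise replace the saved copy with data written after the snapshot.

```python
BLOCK_SIZE = 4  # hypothetical, tiny so the example is easy to follow


class LiveDisk:
    """Toy virtual disk with a livebackup-style copy-before-write interposer."""

    def __init__(self, data, block_size=BLOCK_SIZE):
        self.data = bytearray(data)
        self.block_size = block_size
        self.dirty = set()          # current in-memory dirty blocks bitmap
        self.snapshot_dirty = None  # bitmap frozen by the active snapshot, if any
        self.cow = {}               # block number -> original contents (the COW file)

    def create_snapshot(self):
        # Freeze the current bitmap for the backup and start a fresh one.
        self.snapshot_dirty, self.dirty, self.cow = self.dirty, set(), {}

    def destroy_snapshot(self):
        # Nothing to merge: the saved original blocks are simply discarded.
        self.snapshot_dirty, self.cow = None, {}

    def write(self, offset, buf):
        if not buf:
            return
        first = offset // self.block_size
        last = (offset + len(buf) - 1) // self.block_size
        for block in range(first, last + 1):
            needed = self.snapshot_dirty is not None and block in self.snapshot_dirty
            # Save the original contents the first time a block the backup still
            # needs is overwritten; later overwrites must not replace that copy.
            if needed and block not in self.cow:
                start = block * self.block_size
                self.cow[block] = bytes(self.data[start:start + self.block_size])
            self.dirty.add(block)
        self.data[offset:offset + len(buf)] = buf


if __name__ == "__main__":
    disk = LiveDisk(b"AAAABBBBCCCC")
    disk.write(0, b"xx")       # before the snapshot: block 0 becomes dirty
    disk.create_snapshot()     # the backup needs block 0 as b"xxAA"
    disk.write(0, b"yyyy")     # block 0 is in the snapshot bitmap: copied first
    disk.write(4, b"zz")       # block 1 is not needed by this backup: no copy
    print(disk.cow)            # {0: b'xxAA'}
    print(sorted(disk.dirty))  # [0, 1]
```

Writes after the snapshot still go to the live disk and into the fresh bitmap, so they are picked up by the next incremental backup.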

Usage Example

In the following example, note the new command line parameters: livebackup_port, livebackup_dir and livebackup=on.

# ./x86_64-softmmu/qemu-system-x86_64 -drive file=/dev/kvm_vol_group/kvm_root_part,boot=on,if=virtio,livebackup=on -drive file=/dev/kvm_vol_group/kvm_disk1,if=virtio,livebackup=on -m 512 -net nic,model=virtio,macaddr=52:54:00:00:00:01 -net tap,ifname=tap0,script=no,downscript=no -vnc 0.0.0.0:1000 -usb -usbdevice tablet -livebackup_dir /root/kvm/livebackup -livebackup_port 7900

- In the above example, the KVM VM has two drives, /dev/kvm_vol_group/kvm_root_part and /dev/kvm_vol_group/kvm_disk1. livebackup is enabled for both because each drive parameter includes livebackup=on.

- The command line parameter "-livebackup_port 7900" means qemu's livebackup thread listens on port 7900 for connections from the livebackup_client

- The command line parameter "-livebackup_dir /root/kvm/livebackup" causes qemu's livebackup logic to store COW files, dirty bitmap files, etc. in this directory.

The following command invokes the livebackup client:

# livebackup_client /root/kvm-backup 192.168.1.220 7900

When this command is invoked, livebackup_client connects to the qemu process on machine 192.168.1.220 that is listening on port 7900, transfers the dirty blocks, and creates or updates the virtual disk images in the local directory /root/kvm-backup.

Illustrations

Normal Operation

livebackup_client calls snapshot before transferring dirty blocks

While a backup operation is in progress

Details

- Two new command line parameters and one additional parameter to the drive descriptor are added:

- livebackup_dir: specifies the directory where livebackup COW files, conf files and dirty bitmap files are stored

- livebackup_port: specifies the TCP port at which qemu's livebackup thread listens

- livebackup=on: This is a parameter to a drive descriptor that turns on livebackup for that virtual disk

- When the qemu block driver is called to open a virtual disk file, it checks whether livebackup=on is specified for that disk.

- If livebackup is enabled, then this disk is part of the backup set, and the block driver for that virtual disk starts tracking blocks that are modified using an in-memory dirty blocks bitmap.

- The dirty blocks bitmap is kept in memory while the VM is running, saved to a file when the VM shuts down, and read in again when the VM boots. Thus it persists across VM reboots.

- qemu starts a livebackup thread that listens on a TCP port for connections from livebackup_client.

- When the operator wants to take an incremental backup of the running VM, they run the livebackup_client program, which opens a TCP connection to the livebackup thread of the qemu process.

- First, the livebackup_client issues a snapshot command.

- qemu saves the dirty blocks bitmap of each virtual disk in a snapshot struct, and allocates a new in-memory dirty blocks bitmap for each virtual disk.

- From now on, until the livebackup_client destroys the snapshot, each write from the VM is checked by the livebackup interposer. If the blocks written are already marked as dirty in the snapshot struct's dirty blocks bitmap, the original blocks are saved in a COW file before the VM write is allowed to proceed.

- The livebackup_client now iterates through all the dirty blocks in the snapshot, and transfers them over to the backup server. It can either reconstitute the virtual disk image at the time of the backup by writing the blocks to the virtual disk image file, or can save the blocks in a COW redo file of qcow, qcow2 or vmdk format.

- The important thing to note is that qemu needs to copy original blocks into a COW file only while the livebackup_client program is connected to qemu and transferring blocks. Ideally, this is something like 15 minutes out of every 24 hours.
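The transfer step can be illustrated from the point of view of the data being copied. The sketch assumes (this is implied by the design rather than spelled out in it) that the contents of a dirty block as of snapshot time are its saved original in the COW file if the VM has overwritten it since the snapshot, and the live disk contents otherwise. The network protocol between qemu and livebackup_client is not modeled, the block size and the function names `snapshot_block` and `apply_incremental` are hypothetical, and the backup image is updated in place, corresponding to the "reconstitute the virtual disk image" option.

```python
def snapshot_block(current, cow, block, block_size):
    """Return `block` as it was when the snapshot was taken: the saved original
    if the VM has overwritten it since, otherwise the live disk contents."""
    if block in cow:
        return cow[block]
    start = block * block_size
    return bytes(current[start:start + block_size])


def apply_incremental(backup, current, cow, snapshot_dirty, block_size):
    """Update `backup` (a bytearray holding the previous backup image) in place
    so that it matches the disk at snapshot time."""
    for block in sorted(snapshot_dirty):
        start = block * block_size
        backup[start:start + block_size] = snapshot_block(current, cow, block, block_size)
    return backup


if __name__ == "__main__":
    previous_backup = bytearray(b"AAAABBBBCCCC")
    # At snapshot time the disk was b"xxAABBBBCCdd", so blocks 0 and 2 were dirty.
    # After the snapshot, the VM overwrote block 0 (original saved in the COW
    # store) and block 1 (not needed by this backup, so never copied).
    current = bytearray(b"yyyyzzBBCCdd")
    cow = {0: b"xxAA"}
    print(apply_incremental(previous_backup, current, cow, {0, 2}, 4))
    # bytearray(b'xxAABBBBCCdd')
```

The result matches the disk at snapshot time even though the VM kept writing during the transfer; once every dirty block has been sent, the COW contents are no longer needed and the snapshot can be destroyed.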

Comparison to other proposed qemu features that solve the same problem

Snapshots and Snapshots2

snapshots

snapshots2

In both of these proposals, the original virtual disk is made read-only and the VM writes to a different COW file. After the backup of the original virtual disk file is complete, the COW file is merged into the original virtual disk file.

Instead, I create an Original-Blocks-COW-file to store the original blocks that the VM overwrites every time it performs a write while the backup is in progress. Livebackup copies these underlying blocks from the original virtual disk file before the VM's write to the original virtual disk file is scheduled. The advantage is that no merge is necessary at the end of the backup; we can simply delete the Original-Blocks-COW-file.
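For contrast, the redirect-on-write approach of the Snapshots and Snapshots2 proposals can be sketched as a toy model. This is an illustration only, not code from either proposal: `RedirectOnWrite` is a hypothetical name, and writes are assumed to cover whole blocks for simplicity. The point it shows is the extra merge step: every block the VM wrote during the backup must be copied back into the base image afterwards, whereas in Livebackup the live disk is already up to date and the Original-Blocks-COW-file is just deleted.

```python
class RedirectOnWrite:
    """Snapshots/Snapshots2 style: the base image is frozen and VM writes are
    redirected to an overlay, which must be merged back after the backup."""

    def __init__(self, base, block_size):
        self.base = base          # bytearray; read-only while the backup runs
        self.block_size = block_size
        self.overlay = {}         # block number -> data written during the backup

    def write_block(self, block, buf):
        # Whole-block writes only, to keep the sketch short.
        self.overlay[block] = bytes(buf)

    def read_block(self, block):
        # The VM must see its own writes, so reads check the overlay first.
        if block in self.overlay:
            return self.overlay[block]
        start = block * self.block_size
        return bytes(self.base[start:start + self.block_size])

    def finish_backup(self):
        """Merge the overlay into the base image; returns the number of blocks copied."""
        for block, buf in self.overlay.items():
            start = block * self.block_size
            self.base[start:start + self.block_size] = buf
        merged = len(self.overlay)
        self.overlay.clear()
        return merged


if __name__ == "__main__":
    disk = RedirectOnWrite(bytearray(b"AAAABBBB"), 4)
    disk.write_block(1, b"zzzz")  # redirected; the base stays untouched for the backup
    print(disk.finish_backup())   # 1 block merged back
    print(bytes(disk.base))       # b'AAAAzzzz'
```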

Here's Stefan Hajnoczi's feedback (Thanks, Stefan)

Here's what I understand:

1. User takes a snapshot of the disk, QEMU creates old-disk.img backed by the current-disk.img.

2. Guest issues a write A.

3. QEMU reads B from current-disk.img.

4. QEMU writes B to old-disk.img.

5. QEMU writes A to current-disk.img.

6. Guest receives write completion A.

The tricky thing is what happens if there is a failure after Step 5. If writes A and B were unstable writes (no fsync()) then no ordering is guaranteed and perhaps write A reached current-disk.img but write B did not reach old-disk.img. In this case we no longer have a consistent old-disk.img snapshot - we're left with an updated current-disk.img and old-disk.img does not have a copy of the old data.

The solution is to fsync() after Step 4 and before Step 5 but this will hurt performance. We now have an extra read, write, and fsync() on every write.
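The ordering fix can be written down as a short POSIX-only Python sketch of steps 3 to 5. It is a simplification: old-disk.img is treated as a plain file that stores saved data at the same offsets as current-disk.img, rather than an image format with a backing file, and `guarded_write` is a hypothetical name. The `os.fsync` call between steps 4 and 5 is the extra cost Stefan describes, paid on every guest write that needs a copy.

```python
import os


def guarded_write(current_fd, old_fd, offset, data):
    """Apply guest write A (`data` at `offset`) to current-disk.img only after
    the original contents B have been saved durably to old-disk.img."""
    original = os.pread(current_fd, len(data), offset)  # step 3: read B
    os.pwrite(old_fd, original, offset)                 # step 4: write B
    os.fsync(old_fd)                                    # B is now on stable storage
    os.pwrite(current_fd, data, offset)                 # step 5: write A
    # Step 6 (completing write A to the guest) happens after this returns.
```

Without the `fsync`, the two `pwrite` calls could reach the disk in either order, which is exactly the failure case described above.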

Failure Scenarios

qemu crashes during normal operation of the VM

When this happens, the livebackup_client is forced to do a full backup the next time around. Here's how: livebackup writes the in-memory dirty bitmap to a dirty bitmap file only at an orderly shutdown of qemu. After a crash, therefore, the mtime of the virtual disk file is later than the mtime of the livebackup dirty bitmap file. This causes livebackup to consider the dirty bitmap invalid, and forces the livebackup_client to do a full backup.
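The mtime test can be sketched in a few lines of Python. This assumes the virtual disk is a regular image file whose mtime the host filesystem updates on every write; `bitmap_is_valid` and the bitmap file path are hypothetical, since the proposal does not name them. A missing bitmap file is treated the same as a stale one.

```python
import os


def bitmap_is_valid(disk_path, bitmap_path):
    """Return True if the saved dirty bitmap can be trusted for an incremental backup.

    The bitmap file is written only on orderly shutdown, so a disk image that was
    modified after the bitmap was saved (e.g. because qemu crashed) makes it stale.
    """
    if not os.path.exists(bitmap_path):
        return False  # no saved bitmap: only a full backup is safe
    return os.stat(disk_path).st_mtime <= os.stat(bitmap_path).st_mtime
```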

qemu crashes while livebackup is in progress

In this case too, the dirty bitmap file and the COW file that holds the original blocks saved while the livebackup is in progress are deleted, so the livebackup_client is forced to do a full backup the next time around.

livebackup_client crashes while livebackup is in progress

In this case, a new livebackup_client may be started, and it can redo the last type of backup it was doing, either an incremental backup or a full backup. Note that all the blocks of that backup need to be transferred again, because the qemu livebackup code does not keep track of how far the client got. However, it does not need to be a forced full backup.

Testing methodology

The basic guarantee that Livebackup provides is this: a backup of all the virtual disks of a VM will be created such that the backup virtual disk images are a bit-for-bit match of the virtual disk images at the point in time when the livebackup_client program issues a 'create snapshot' command. Once the Livebackup code was written, I performed a full backup followed by several hundred incremental backups while the VM was performing intense disk I/O. The backup disk images booted fine, and the data seemed good. However, this proves nothing. To state with certainty that the images were a bit-for-bit match, I came up with the following technique:

- I created a VM with two virtual disks, each of which was an LVM logical volume on the VM server. In my experiments, these were /dev/kvm_vol_group/kvm_root_part and /dev/kvm_vol_group/kvm_disk1.

- I added code to my implementation of do_snap in livebackup.c so that, after the livebackup snapshot is created, it invokes lvcreate to create an LVM snapshot of the underlying LVM logical volume. E.g., the command would be something like:

# /sbin/lvcreate -L1G -s -n kvm_root_part_backup /dev/kvm_vol_group/kvm_root_part

- I would run the livebackup_client on the same machine as the server, and create a backup of the virtual disk images on the same machine.

- Next, it is a simple matter of running cmp to compare each backup disk image file with the corresponding snapshot logical volume, e.g.:

# cmp /dev/kvm_vol_group/kvm_root_part_backup kvm_root_part

# cmp /dev/kvm_vol_group/kvm_disk1_backup ./kvm_disk1
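If the comparison needs to be scripted, for example after each of many incremental backups, a hypothetical Python equivalent of the cmp check might look like the sketch below. `first_difference` is an illustrative name, not part of Livebackup. It reads both images in chunks, so it does not need to hold a whole disk image in memory, and returns the 0-based offset of the first differing byte (cmp itself numbers bytes from 1), or None if the files are identical including their length.

```python
def first_difference(path_a, path_b, chunk_size=1 << 20):
    """Return the 0-based offset of the first differing byte, or None if the
    two files are identical (same contents and same length)."""
    offset = 0
    with open(path_a, "rb") as a, open(path_b, "rb") as b:
        while True:
            chunk_a = a.read(chunk_size)
            chunk_b = b.read(chunk_size)
            if chunk_a != chunk_b:
                for i, (x, y) in enumerate(zip(chunk_a, chunk_b)):
                    if x != y:
                        return offset + i
                # The common prefix matches, so one file is shorter than the other.
                return offset + min(len(chunk_a), len(chunk_b))
            if not chunk_a:
                return None  # both files ended at the same point
            offset += len(chunk_a)
```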

Other technologies that may be used to solve this problem

LVM snapshots

It is possible to create a new LVM partition for each virtual disk in the VM. When a VM needs to be backed up, each of these LVM partitions is snapshotted. At this point things get messy: I don't know of a good way to identify the blocks that were modified since the last backup. Also, once these blocks are identified, we need a mechanism to transfer them over a TCP connection to the backup server. Perhaps the 'dirty blocks' map could be exported to userland and a daemon could transfer the blocks. Or maybe a kernel thread could listen on TCP sockets and transfer the blocks to the backup client (I don't know if this is possible).