Documentation/9p

9pfs Developers Documentation

This page is intended for developers who want to work on the 9p passthrough filesystem implementation in QEMU. For regular usage aspects, see the separate page Documentation/9psetup instead.

9p Protocol

9pfs uses the Plan 9 network protocol to communicate file I/O operations between guest systems (clients) and the 9p server (see below). Several separate documents specify different variants of the protocol, which can be confusing at first, so here is a summary of the individual protocol flavours.

Introduction

If this is your first contact with the 9p protocol, you might have a look at this introduction by Eric Van Hensbergen. It is an easily understandable text that shows how the protocol works, with examples of individual requests and their response messages: Using 9P2000 Under Linux

There are currently 3 dialects of the 9p network protocol called "9p2000", "9p2000.u" and "9p2000.L". Note that QEMU's 9pfs implementation only supports either "9p2000.u" or "9p2000.L".
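To give a first impression of what a 9p message looks like on the wire, here is a minimal sketch in Python. It assumes the framing from the 9p2000 specification: every message starts with a 4-byte size, a 1-byte message type and a 2-byte tag (all little-endian), and strings are prefixed with a 2-byte length. The helper names (`pack_string`, `build_tversion`, `parse_header`) are made up for this illustration and are not part of QEMU. The version string is assumed to be spelled "9P2000.L" on the wire.

```python
import struct

TVERSION = 100   # message type number of Tversion in the 9p2000 specification
NOTAG = 0xFFFF   # tag value reserved for version negotiation


def pack_string(text):
    """Encode a 9p string: 2-byte little-endian length, then UTF-8 bytes."""
    data = text.encode("utf-8")
    return struct.pack("<H", len(data)) + data


def build_tversion(msize, version):
    """Build a Tversion request: size[4] type[1] tag[2] msize[4] version[s]."""
    body = struct.pack("<BHI", TVERSION, NOTAG, msize) + pack_string(version)
    # The size field counts the whole message, including its own 4 bytes.
    return struct.pack("<I", 4 + len(body)) + body


def parse_header(message):
    """Return (size, type, tag) from the common 7-byte message header."""
    return struct.unpack_from("<IBH", message, 0)


# Hypothetical example values: an 8192-byte maximum message size.
msg = build_tversion(8192, "9P2000.L")
size, mtype, tag = parse_header(msg)
```

The client sends such a request first to negotiate the dialect and the maximum message size with the server.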

9p2000

This is the basis of the 9p protocol the other two dialects derive from. This is the specification of the protocol: 9p2000 Protocol

9p2000.u

The "9p2000.u" dialect adds extensions and minor adjustments to the protocol for Unix systems, especially for common data types available on a Unix system. For instance the basic "9p2000" only returns an error text if some error occurred on server side, whereas "9p2000.u" also returns the common POSIX error code for the individual error. 9p2000.u Protocol
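The difference in error reporting can be sketched as follows. This is an illustration only, assuming the Rerror layouts from the specifications: `size[4] Rerror tag[2] ename[s]` for plain "9p2000", with an additional trailing `errno[4]` for "9p2000.u". The function names are hypothetical and do not correspond to QEMU code.

```python
import struct

RERROR = 107  # message type number of Rerror in the 9p2000 specification


def build_rerror(tag, ename, errno_value=None):
    """Build an Rerror reply; pass errno_value to get the 9p2000.u layout."""
    name = ename.encode("utf-8")
    body = struct.pack("<BH", RERROR, tag) + struct.pack("<H", len(name)) + name
    if errno_value is not None:
        body += struct.pack("<I", errno_value)  # 9p2000.u extension
    return struct.pack("<I", 4 + len(body)) + body


def parse_rerror(message, unix_extension):
    """Return (tag, ename, errno); errno is None for plain 9p2000."""
    _size, mtype, tag = struct.unpack_from("<IBH", message, 0)
    if mtype != RERROR:
        raise ValueError("not an Rerror message")
    (name_len,) = struct.unpack_from("<H", message, 7)
    ename = message[9:9 + name_len].decode("utf-8")
    errno_value = None
    if unix_extension:
        (errno_value,) = struct.unpack_from("<I", message, 9 + name_len)
    return tag, ename, errno_value


# Example values chosen for illustration: tag 1, error number 2.
plain = build_rerror(1, "file not found")
unix = build_rerror(1, "file not found", 2)
```

With the plain dialect a client has to interpret the error text, whereas with "9p2000.u" it can use the numeric code directly.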

9p2000.L

Similar to the "9p2000.u" dialect, the "9p2000.L" dialect adds extensions and minor adjustments to the protocol specifically for Linux systems. Again, this mostly targets data types available on a Linux system. 9p2000.L Protocol
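As one example of such a Linux-specific adjustment, "9p2000.L" reports errors with its own Rlerror message, which carries only a numeric error code. The sketch below assumes the layout `size[4] Rlerror tag[2] ecode[4]` with message type number 7. The function names are hypothetical.

```python
import struct

RLERROR = 7  # message type number of Rlerror (assumed from the 9p2000.L definitions)


def build_rlerror(tag, ecode):
    """Build an Rlerror reply: size[4] type[1] tag[2] ecode[4] (11 bytes)."""
    return struct.pack("<IBHI", 11, RLERROR, tag, ecode)


def parse_rlerror(message):
    """Return (tag, ecode) of an Rlerror reply."""
    size, mtype, tag, ecode = struct.unpack_from("<IBHI", message, 0)
    if mtype != RLERROR or size != 11:
        raise ValueError("not an Rlerror message")
    return tag, ecode


# Example values chosen for illustration: tag 5, error code 2.
reply = build_rlerror(5, 2)
```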

Topology

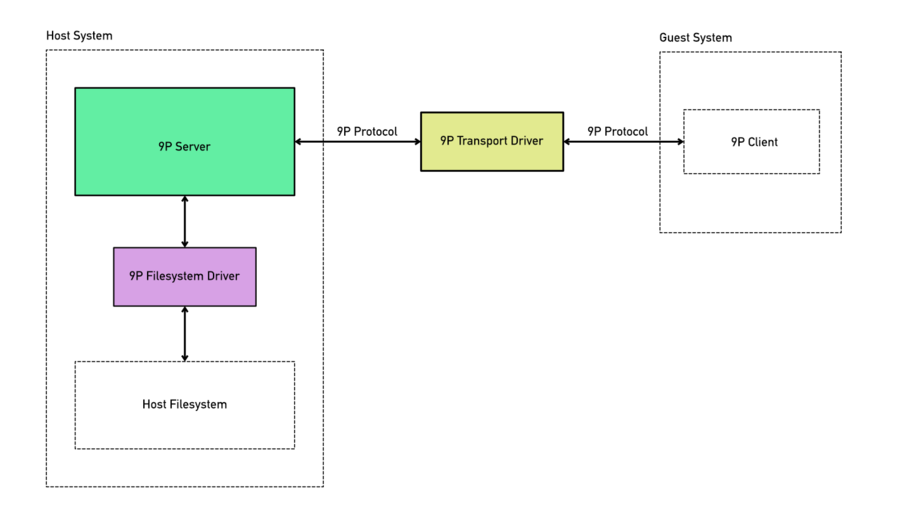

The following figure shows the basic structure of the 9pfs implementation in QEMU.

The implementation consists of 3 modular components: 9p server, 9p filesystem drivers and 9p transport drivers.

9p Server

This is the controller portion of the 9pfs code base. It handles the raw 9p network protocol and the general high-level control flow of the 9p requests sent by 9p clients (the guest systems). The 9p server is basically a full-fledged file server and accordingly has the highest code complexity in the 9pfs code base. Most of it is in the hw/9pfs/9p.c source file.

9p Filesystem Drivers

The 9p server uses a VFS layer for the actual file operations, which makes it flexible regarding where the file storage data comes from and how exactly that data is accessed. There are currently 3 different 9p file system driver implementations available:

1. local fs driver

This is the fs driver most commonly used with 9p in practice. It basically just maps the individual VFS functions (more or less) directly to the host system's file system functions like open(), read(), write(), etc. You find this fs driver implementation in the hw/9pfs/9p-local.c source file.

2. proxy fs driver

This fs driver was supposed to dispatch the VFS functions so that they are called from a separate process (by virtfs-proxy-helper). However, the "proxy" driver is currently not considered to be production grade.

3. synth fs driver

This fs driver is only used for development purposes. It just simulates individual filesystem operations and therefore is not useful for anything on a production system. The main purpose of the "synth" fs driver is to simulate certain fs behaviours that would be hard to trigger with a regular fs driver such as the "local" fs driver. Right now the synth fs driver is used by the automated 9pfs test cases and by the automated 9pfs fuzzing code. The automated test cases, for instance, use the "synth" fs driver to check the 9p server's correct behaviour on 9p Tflush requests, which a client may send to abort a file I/O operation that might already be blocking for a long time. In general the "synth" driver is very useful for simulating any multi-threaded use cases.
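The idea behind the VFS layer can be sketched with two interchangeable drivers behind one set of operations. This is a conceptual illustration in Python, not QEMU's actual C interface: the class and method names (`LocalDriver`, `SynthDriver`, `store_and_fetch`) are invented here. One driver forwards to the host file system, the other only simulates files in memory.

```python
import os
import tempfile


class LocalDriver:
    """Hypothetical driver forwarding each call to the host file system."""

    def __init__(self, root):
        self.root = root

    def open(self, name, flags):
        return os.open(os.path.join(self.root, name), flags, 0o600)

    def write(self, handle, data):
        return os.write(handle, data)

    def read(self, handle, offset, count):
        os.lseek(handle, offset, os.SEEK_SET)
        return os.read(handle, count)

    def close(self, handle):
        os.close(handle)


class SynthDriver:
    """Hypothetical driver that only simulates files in memory."""

    def __init__(self):
        self.files = {}    # file name -> simulated content
        self.handles = {}  # handle number -> file name

    def open(self, name, flags):
        self.files.setdefault(name, bytearray())
        handle = len(self.handles)
        self.handles[handle] = name
        return handle

    def write(self, handle, data):
        self.files[self.handles[handle]] += data  # simplified: always appends
        return len(data)

    def read(self, handle, offset, count):
        return bytes(self.files[self.handles[handle]][offset:offset + count])

    def close(self, handle):
        pass  # nothing to release for simulated files


def store_and_fetch(driver, name, payload):
    """Server-side code that works with any driver offering the same calls."""
    handle = driver.open(name, os.O_RDWR | os.O_CREAT)
    driver.write(handle, payload)
    data = driver.read(handle, 0, len(payload))
    driver.close(handle)
    return data


with tempfile.TemporaryDirectory() as export_dir:
    local_result = store_and_fetch(LocalDriver(export_dir), "demo.txt", b"hello")
synth_result = store_and_fetch(SynthDriver(), "demo.txt", b"hello")
```

Because the calling code only depends on the common operations, a simulated driver can be modified freely to provoke behaviours that a real file system would rarely show.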

9p Transport Drivers

The third component of the 9pfs implementation in QEMU is the "transport" driver, which is the communication channel between host system and guest system used by the 9p server. There are currently two 9p transport driver implementations available in QEMU:

1. virtio transport driver

The 9p "virtio" transport driver uses e.g. a virtual PCI device, and on top of it the virtio protocol, to transfer the 9p messages between clients (guest systems) and the 9p server (host system).

2. Xen transport driver

TODO

Test Cases

Whatever you change in the 9pfs code base, please run the automated test cases after modifying the source code to ensure that your changes did not break the expected behaviour of 9pfs. Running the tests is very simple: it requires neither a guest OS installation nor booting a guest OS, so you can run them in a few seconds. The test cases are also a very efficient way to check, while still coding, whether your 9pfs changes actually do what you intended.

To run the 9pfs tests e.g. on an x86 system, all you need to do is execute the following two commands:

export QTEST_QEMU_BINARY=x86_64-softmmu/qemu-system-x86_64

tests/qtest/qos-test
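If you prefer to start the tests from a script, the two commands above could be wrapped roughly as follows. This is only a sketch: the function names are hypothetical, and the default paths are simply the ones from the commands above, assumed to be relative to your build directory.

```python
import os
import subprocess


def qtest_invocation(qemu_binary="x86_64-softmmu/qemu-system-x86_64",
                     test_binary="tests/qtest/qos-test"):
    """Return (environment, argv) equivalent to the two shell commands."""
    env = dict(os.environ)
    env["QTEST_QEMU_BINARY"] = qemu_binary
    return env, [test_binary]


def run_qos_tests(build_dir="."):
    """Run the test binary from build_dir and return its exit status."""
    env, argv = qtest_invocation()
    return subprocess.run(argv, cwd=build_dir, env=env).returncode
```

Calling `run_qos_tests()` from the build directory would then correspond to running the two commands by hand.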

All 9pfs test cases are in the tests/qtest/virtio-9p-test.c source file.

As you can see at the end of the virtio-9p-test.c file, the 9pfs test cases are split into two groups of tests. The first group of tests uses the "synth" fs driver, so all file I/O operations are simulated, and you can basically add all kinds of hacks to the synth driver to simulate whatever you need for testing certain fs behaviours.

The second group of tests uses the "local" fs driver, so these tests actually operate on real directories and files in a test directory on the host filesystem. Some issues that happened in the past were caused by a combination of the 9p server and the actual "local" fs driver that is usually used on production machines. For that reason this group of tests covers issues that happen across these two components of 9pfs.

Contribute

Please refer to Contribute/SubmitAPatch for instructions about how to send your patches.