Internships/ProjectIdeas/TCGCodeQuality: Difference between revisions

| (71 intermediate revisions by one other user not shown) | |||

| Line 8: | Line 8: | ||

'''Summary:''' in most applications, the majority of the execution time is spent in a very small portion of code. Regions of a code which have high-frequency execution are called hot while all other regions are called cold. As a direct consequence, emulators also spent most of their execution time emulating these hot regions and, so, dynamic compilers and translators need to pay extra attention to them. To guarantee that these hot regions are compiled/translated generating high-quality code is fundamental to achieve a final high-performance emulation. Thus, one of the most important steps in tuning an emulator performance is to identify which are the hot regions and to measure their translation quality. | '''Summary:''' in most applications, the majority of the execution time is spent in a very small portion of code. Regions of a code which have high-frequency execution are called hot while all other regions are called cold. As a direct consequence, emulators also spent most of their execution time emulating these hot regions and, so, dynamic compilers and translators need to pay extra attention to them. To guarantee that these hot regions are compiled/translated generating high-quality code is fundamental to achieve a final high-performance emulation. Thus, one of the most important steps in tuning an emulator performance is to identify which are the hot regions and to measure their translation quality. | ||

== | == TBStatistics (TBS) == | ||

Improving the code generation of the TCG backend is a hard task that involves reading through large amounts of text looking for anomalies in the generated code. It would be nice to have tools to more readily extract and parse code generation information. This would include options to dump: | Improving the code generation of the TCG backend is a hard task that involves reading through large amounts of text looking for anomalies in the generated code. It would be nice to have tools to more readily extract and parse code generation information. This would include options to dump: | ||

| Line 14: | Line 14: | ||

* Translation counters: | * Translation counters: | ||

** The number of times a TB has been translated, uncached and spanned. | ** The number of times a TB has been translated, uncached and spanned. | ||

** The amount of time spent doing such translations and optimizations. | |||

* Code quality metrics: | * Code quality metrics: | ||

** The number of TB guest, IR (TCG ops), and host instructions. | ** The number of TB guest, IR (TCG ops), and host instructions. | ||

** The Number of spills during the register allocation. | ** The Number of spills during the register allocation. | ||

For that reason, we collect all this information dynamically for | For that reason, we collect all this information dynamically for every TB or for a specific set of TBs and store it on TBStatistics structures. Every TB can have one TBStatistics linked to it by a new field inserted in the TranslationBlock structure[https://github.com/vandersonmr/gsoc-qemu/blob/bf77a21e67cfc76b9730905dbdd4df2654a3b54a/include/exec/exec-all.h#L451]. Moreover, TBStatistics are not flushed during tb_flush and they survive longer being able to be relinked to retranslated TBs using their keys (phys_pc, pc, flags, cs_base) to matches new TBs and their TBStats. | ||

Not all stats in a TBStatistics need to be being collected. The stats_enabled flag can be used to set which stats are going to be collected/are enabled to a particular TBStatistics. The possible flags can be found in quemu-commons.h and they are: TB_NOTHING, TB_EXEC_STATS (to collect code and translations stats), TB_JIT_STATS (to collect executions stats), TB_JIT_TIME (to collect time stats) and TB_PAUSED. | |||

struct TBStatistics { | struct TBStatistics { | ||

tb_page_addr_t phys_pc; | |||

target_ulong pc; | |||

uint32_t flags; | |||

/* cs_base isn't included in the hash but we do check for matches */ | |||

target_ulong cs_base; | |||

uint32_t stats_enabled; | |||

/* Execution stats */ | |||

struct { | |||

unsigned long normal; | |||

unsigned long atomic; | |||

/* filled only when dumping x% cover set */ | |||

uint16_t coverage; | |||

} executions; | |||

struct { | |||

unsigned num_guest_inst; | |||

unsigned num_tcg_ops; | |||

unsigned num_tcg_ops_opt; | |||

unsigned spills; | |||

/* CONFIG_PROFILE */ | |||

unsigned temps; | |||

unsigned deleted_ops; | |||

unsigned in_len; | |||

unsigned out_len; | |||

unsigned search_out_len; | |||

} code; | |||

struct { | |||

unsigned long total; | |||

unsigned long spanning; | |||

} translations; | |||

struct { | |||

int64_t restore; | |||

uint64_t restore_count; | |||

int64_t interm; | |||

int64_t code; | |||

int64_t opt; | |||

int64_t la; | |||

} time; | |||

/* HMP information - used for referring to previous search */ | |||

int display_id; | |||

/* current TB linked to this TBStatistics */ | |||

TranslationBlock *tb; | |||

}; | }; | ||

=== Creating and Storing TBStats === | === Creating and Storing TBStats === | ||

When a TB is going to be | When a TB is going to be generated by calling tb_gen_code, we check if tbstats collection is enabled and if the current TB is not uncached. If so, we call tb_get_stats which either creates a new TBStatistics for that specific pc, phys_pc and flag or returns an already existing one (stored in a qht hash table in TBContext). Then, we get the default flags set through HMP or -d tb_stats argument. This default value will be used to set what stats are going to be collected for that TB. | ||

/* | |||

* We want to fetch the stats structure before we start code | |||

* generation so we can count interesting things about this | |||

* generation. | |||

*/ | |||

if (tb_stats_collection_enabled() && !(tb->cflags & CF_NOCACHE)) { | |||

tb->tb_stats = tb_get_stats(phys_pc, pc, cs_base, flags, tb); | |||

uint32_t flag = get_default_tbstats_flag(); | |||

if (qemu_log_in_addr_range(tb->pc)) { | |||

if (flag & TB_EXEC_STATS) { | |||

tb->tb_stats->stats_enabled |= TB_EXEC_STATS; | |||

} | |||

} | |||

if (flag & TB_JIT_STATS) { | |||

tb->tb_stats->stats_enabled |= TB_JIT_STATS; | |||

atomic_inc(&tb->tb_stats->translations.total); | |||

} | |||

if (flag & TB_JIT_TIME) { | |||

tb->tb_stats->stats_enabled |= TB_JIT_TIME; | |||

ti = profile_getclock(); | |||

} | |||

} else { | |||

tb->tb_stats = NULL; | |||

} | |||

=== Collecting/Filling TBStatistics Information === | |||

To collect the stats for the TBStatistics, different parts of the QEMU source code were changed. We list here how we collected each information for each field. | |||

==== TB_EXEC_STATS ==== | |||

'''Execution Count | '''Normal Execution Count''' | ||

To collect the execution count of each TB, we instrument the begin of each one of them, adding a call to a helper function called '''exec_freq'''. This is done | To collect the execution count of each TB, we instrument the begin of each one of them, adding a call to a helper function called '''exec_freq'''. This instrumentation is done in the '''gen_tb_exec_count''' function. The '''exec_freq''' helper receives the address of the TBStatistic structure linked to the TB. | ||

include/exec/gen-icount.h: | include/exec/gen-icount.h: | ||

static inline void gen_tb_exec_count(TranslationBlock *tb) | static inline void gen_tb_exec_count(TranslationBlock *tb) | ||

{ | { | ||

if ( | if (tb_stats_enabled(tb, TB_EXEC_STATS)) { | ||

TCGv_ptr ptr = tcg_const_ptr(tb->tb_stats); | TCGv_ptr ptr = tcg_const_ptr(tb->tb_stats); | ||

gen_helper_inc_exec_freq(ptr); | gen_helper_inc_exec_freq(ptr); | ||

| Line 72: | Line 127: | ||

} | } | ||

The helper function access the field executions.total of the TBStatistic structure and increment it atomically counting the execution of | The helper function access the field executions.total of the TBStatistic structure (which address was passed as a parameter) and increment it atomically counting one more execution of the TB. This is done on every execution start of a TB. | ||

accel/tcg/tcg-runtime.c: | accel/tcg/tcg-runtime.c: | ||

| Line 79: | Line 134: | ||

TBStatistics *stats = (TBStatistics *) ptr; | TBStatistics *stats = (TBStatistics *) ptr; | ||

g_assert(stats); | g_assert(stats); | ||

atomic_inc(&stats->executions.total); | atomic_inc(&stats->executions.normal); | ||

} | |||

'''Atomic Execution Count''' | |||

TBs can also be executed atomically and we count how many times this is done by incrementing the executions.atomic field every time cpu_exec_step_atomic is called. | |||

void cpu_exec_step_atomic(CPUState *cpu) | |||

.... | |||

if (tb_stats_enabled(tb, TB_EXEC_STATS)) { | |||

tb->tb_stats->executions.atomic++; | |||

} | |||

.... | |||

'''Coverage''' | |||

The field coverage of a TB measures the relative proportion of executed guest instructions that this TB represents when compared to the whole execution. To do so, we calculate these coverages of each TB before dumping any statistics by calling a function named calculate_last_search_coverages. This function basically counts the total number of guest instructions executed and then divide the number of guest instructions of each TB by the total getting the coverage percentage for that TB. | |||

static uint64_t calculate_last_search_coverages(void) | |||

{ | |||

uint64_t total_exec_count = 0; | |||

GList *i; | |||

/* Compute total execution count for all tbs */ | |||

for (i = last_search; i; i = i->next) { | |||

TBStatistics *tbs = (TBStatistics *) i->data; | |||

total_exec_count += | |||

(tbs->executions.atomic + tbs->executions.normal) * tbs->code.num_guest_inst; | |||

} | |||

for (i = last_search; i; i = i->next) { | |||

TBStatistics *tbs = (TBStatistics *) i->data; | |||

uint64_t tb_total_execs = | |||

(tbs->executions.atomic + tbs->executions.normal) * tbs->code.num_guest_inst; | |||

tbs->executions.coverage = (10000 * tb_total_execs) / (total_exec_count + 1); | |||

} | |||

return total_exec_count; | |||

} | } | ||

==== TB_JIT_STATS ==== | |||

'''Spanning''' | '''Spanning and Total Translations''' | ||

We count the number of translations of a region by incrementing its TBStatistics translations.total for every time that tb_gen_code is called. | |||

TBStatistics survive even after flushes and can be recovered and linked to a new translation of a region using its phys_pc, pc, cs_base and flags. | |||

We also count how many times a TB spans by atomically incrementing the translations.spanning field after the span. | |||

accel/tcg/translate-all.c: | accel/tcg/translate-all.c: | ||

| Line 92: | Line 188: | ||

uint32_t flags, int cflags) | uint32_t flags, int cflags) | ||

... | ... | ||

if (tb_stats_collection_enabled()) { | |||

tb->tb_stats = tb_get_stats(phys_pc, pc, cs_base, flags, tb); | |||

... | |||

if (flag & TB_JIT_STATS) { | |||

tb->tb_stats->stats_enabled |= TB_JIT_STATS; | |||

atomic_inc(&tb->tb_stats->translations.total); | |||

} | |||

... | |||

} | |||

... | |||

if ((pc & TARGET_PAGE_MASK) != virt_page2) { | if ((pc & TARGET_PAGE_MASK) != virt_page2) { | ||

phys_page2 = get_page_addr_code(env, virt_page2); | phys_page2 = get_page_addr_code(env, virt_page2); | ||

if (tb_stats_enabled(tb, TB_JIT_STATS)) { | |||

atomic_inc(&tb->tb_stats->translations.spanning); | |||

} | |||

} | } | ||

... | ... | ||

'''Guest Instructions''' | '''Guest Instructions''' | ||

The guest instructions are already counted in the DisasContextBase in the '''translator_loop''', so we simply | The guest instructions are already counted in the DisasContextBase (db->num_insns) in the '''translator_loop''', so we simply add it to the code.num_guest_inst. | ||

accel/tcg/translator.c: | accel/tcg/translator.c: | ||

| Line 110: | Line 216: | ||

CPUState *cpu, TranslationBlock *tb, int max_insns) | CPUState *cpu, TranslationBlock *tb, int max_insns) | ||

.... | .... | ||

db->tb->tb_stats->code.num_guest_inst | if (tb_stats_enabled(tb, TB_JIT_STATS)) { | ||

atomic_add(&db->tb->tb_stats->code.num_guest_inst, db->num_insns); | |||

} | |||

.... | .... | ||

'''Host | '''Host Code Size''' | ||

QEMU does not have any specific way of couting the number of host instructions. Moreover, we realize that implementing such function would need to modify many target-specific code and it would be unfeasible for this GSoC. So, instead, we count the size in bytes of the generated host code that is already calculated in the tb_gen_code function. | |||

accel/tcg/translate-all.c: | accel/tcg/translate-all.c: | ||

| Line 122: | Line 230: | ||

uint32_t flags, int cflags) | uint32_t flags, int cflags) | ||

... | ... | ||

if (tb_stats_enabled(tb, TB_JIT_STATS)) { | |||

if ( | atomic_add(&tb->tb_stats->code.out_len, gen_code_size); | ||

} | } | ||

... | ... | ||

'''TCG Instructions''' | '''TCG Instructions''' | ||

To count the number of TCG instructions we need only to iterate over the ops in the TCGContext and them store the result in the code.num_tcg_inst. | To count the number of TCG instructions we need only to iterate over the ops in the TCGContext and them store the result in the code.num_tcg_inst. We do this twice, before and after aplying the optimizations, filling num_tcg_ops and num_tcg_ops_opt. | ||

tcg/tcg.c: | tcg/tcg.c: | ||

int tcg_gen_code(TCGContext *s, TranslationBlock *tb) | int tcg_gen_code(TCGContext *s, TranslationBlock *tb) | ||

.... | .... | ||

if (tb_stats_enabled(tb, TB_JIT_STATS)) { | |||

int n = 0; | |||

QTAILQ_FOREACH(op, &s->ops, link) { | |||

n++; | |||

} | |||

atomic_add(&tb->tb_stats->code.num_tcg_ops, n); | |||

} | |||

.... | .... | ||

'''Spills''' | |||

We increment the code.spills counter after a load is generated to deal if a register spill in the function temp_sync. | |||

static void temp_sync(...) | |||

... | |||

case TEMP_VAL_REG: | |||

tcg_out_st(s, ts->type, ts->reg, | |||

ts->mem_base->reg, ts->mem_offset); | |||

/* Count number of spills */ | |||

if (tb_stats_enabled(s->current_tb, TB_JIT_STATS)) { | |||

atomic_inc(&s->current_tb->tb_stats->code.spills); | |||

} | |||

break; | |||

... | |||

==== TB_JIT_TIME and Old CONFIG_PROFILER Stats ==== | |||

Other fields in TBStatistics including the ones related to the measurement of time all came from an old global scheme of profiling of QEMU. This profiling was controlled by a CONFIG_PROFILER flag that should be set during compilation. We decided that all statistics should now concentrate in the TBStatistics structure and there was no reason to maintain two mechanisms of profiling. Moreover, instead of collecting these statistics globally we now collect them per TB, having more information and still being able to reconstruct the global profiling by summing up all TB stats. | |||

In each region of code where there was a #ifdef CONFIG_PROFILER follow by updates of global statistics, we changed to an update of the TB stats as shows the following example: | |||

-#ifdef CONFIG_PROFILER | |||

- atomic_set(&prof->restore_time, | |||

- prof->restore_time + profile_getclock() - ti); | |||

- atomic_set(&prof->restore_count, prof->restore_count + 1); | |||

-#endif | |||

+ if (tb_stats_enabled(tb, TB_JIT_TIME)) { | |||

--------- | + atomic_add(&tb->tb_stats->time.restore, profile_getclock() - ti); | ||

+ atomic_inc(&tb->tb_stats->time.restore_count); | |||

+ } | |||

=== Controling and Dumping TBStatistics === | |||

There are two ways of controlling the collection of statistics: before execution by using the -d tb_stats command argument or by using the tb_stats HMP command in the QEMU monitor. | |||

In both, you can choose which will be the default level of profiling collection of the tbs (exec_stats, jit_stats, jit_time, all). | |||

Further, the HMP tb_stats command can be also used to pause, stop or restart the profiling during the execution in system mode. | |||

To dump a list TBs stats, the HMP command "info tb-list" can be used. "info tb-list" receive as parameter the number of tbs to be dumped and which metric it should be sorted by: '''hotness, hg (host/guest), or spills'''. Follows an example of the output of three TBs stats sorted by hotness (execution frequency): | |||

===== info tb-list ===== | |||

TB id:1 | phys:0x34d54 virt:0x0000000000034d54 flags:0x0000f0 | |||

| exec:4828932/0 guest inst cov:16.38% | |||

| trans:1 ints: g:3 op:82 op_opt:34 spills:3 | |||

| h/g (host bytes / guest insts): 90.666664 | |||

| time to gen at 2.4GHz => code:3150.83(ns) IR:712.08(ns) | |||

| targets: 0x0000000000034d5e (id:11), 0x0000000000034d0d (id:2) | |||

TB id:2 | phys:0x34d0d virt:0x0000000000034d0d flags:0x0000f0 | |||

| exec:4825842/0 guest inst cov:21.82% | |||

| trans:1 ints: g:4 op:80 op_opt:38 spills:2 | |||

| h/g (host bytes / guest insts): 84.000000 | |||

| time to gen at 2.4GHz => code:3362.92(ns) IR:793.75(ns) | |||

| targets: 0x0000000000034d19 (id:12), 0x0000000000034d54 (id:1) | |||

TB id:3 | phys:0xec1c1 virt:0x00000000000ec1c1 flags:0x0000b0 | |||

| exec:872032/0 guest inst cov:1.97% | |||

| trans:1 ints: g:2 op:56 op_opt:26 spills:1 | |||

| h/g (host bytes / guest insts): 68.000000 | |||

| time to gen at 2.4GHz => code:1692.08(ns) IR:473.75(ns) | |||

| targets: 0x00000000000ec1c5 (id:4), 0x00000000000ec1cb (id:13) | |||

If necessary to iteratively examine one of this listed tbs the '''info tb id''' command can be used, where the id comes from the TBs listed by "info tb-list". The following example shows the result of dumping the guest instructions of tb with id 1. | |||

==== info tb ==== | |||

TB id:2 | phys:0x34d0d virt:0x0000000000034d0d flags:0x0000f0 | |||

| exec:6956495/0 guest inst cov:21.82% | |||

| trans:2 ints: g:2 op:40 op_opt:19 spills:1 | |||

| h/g (host bytes / guest insts): 84.000000 | |||

| time to gen at 2.4GHz => code:3130.83(ns) IR:722.50(ns) | |||

| targets: 0x0000000000034d19 (id:12), 0x0000000000034d54 (id:1) | |||

---------------- | ---------------- | ||

IN: | IN: | ||

0x00034d0d: 89 de movl %ebx, %esi | |||

0x00034d0f: 26 8b 0e movl %es:(%esi), %ecx | |||

0x00034d12: 26 f6 46 08 80 testb $0x80, %es:8(%esi) | |||

0x00034d17: 75 3b jne 0x34d54 | |||

------------------------------ | |||

In the example the guest instruction of the TB was dumped, but level of code being dumped can be select by a second parameter to "info tb" that can be: out_asm for dumping the host code of the TB, in_asm for dumping the guest code and op and op_opt for dumping the QEMU TCG IR for that TB. | |||

==== info cfg ==== | |||

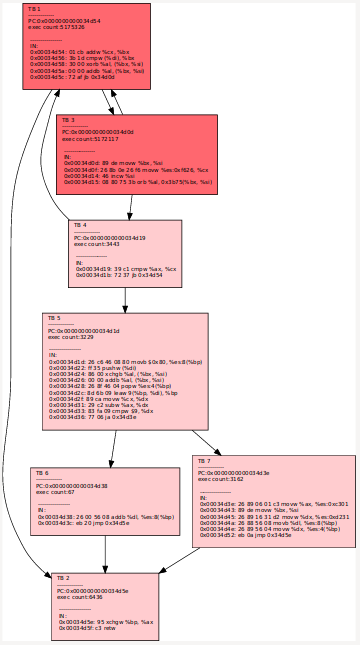

'''info tb''' only shows information of one TB, but '''info cfg id''' can be used to create a .dot representing the Control Flow Graph (CFG) of the neighborhood of a TB. Each node in the graph has the guest code of the TB and its execution frequency with the colors of the nodes representing its relative hotness in the neighborhood. The image below shows an example of such CFG. | |||

[[File:Info-cfg.png|center]] | |||

==== info coverset ==== | |||

Finally, there is an option to dump not the "n" hottest blocks but all necessary hot blocks to achieve m% of the total execution counting ('''info coverset m'''). This is a useful metric to understand the execution footprint of a program. More execution dense applications will have a smaller number of blocks to achieve m% of the execution while sparse ones will have a larger number. Dynamic compilers normally have better performance with applications with a small number of blocks needed to achieve the 90% coverset. | |||

TB id:1 | phys:0x34d54 virt:0x0000000000034d54 flags:0x0000f0 | |||

| exec:5202686/0 guest inst cov:11.28% | |||

| trans:1 ints: g:3 op:82 op_opt:34 spills:3 | |||

| h/g (host bytes / guest insts): 90.666664 | |||

| time to gen at 2.4GHz => code:2793.75(ns) IR:614.58(ns) | |||

| targets: 0x0000000000034d5e (id:3), 0x0000000000034d0d (id:2) | |||

TB id:2 | phys:0x34d0d virt:0x0000000000034d0d flags:0x0000f0 | |||

| exec:5199468/0 guest inst cov:15.03% | |||

| trans:1 ints: g:4 op:80 op_opt:38 spills:2 | |||

| h/g (host bytes / guest insts): 84.000000 | |||

| time to gen at 2.4GHz => code:2958.75(ns) IR:719.58(ns) | |||

| targets: 0x0000000000034d19 (id:4), 0x0000000000034d54 (id:1) | |||

------------------------------ | ------------------------------ | ||

2 TBs to reach 25% of guest inst exec coverage | |||

Total exec | Total of guest insts exec: 138346727 | ||

------------------------------ | ------------------------------ | ||

== Future Work | === Overheads === | ||

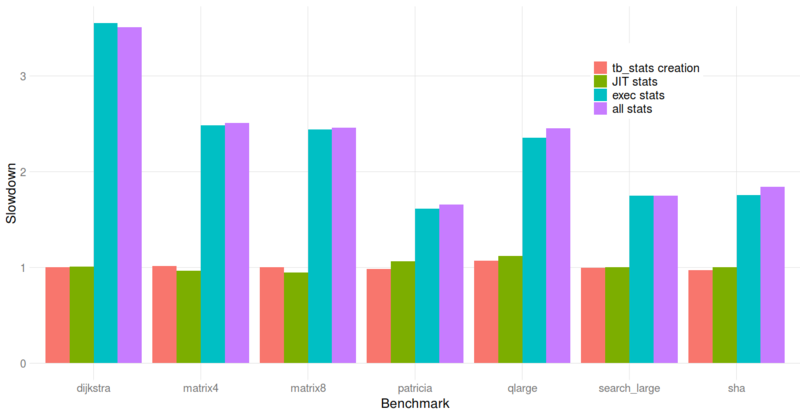

To understand the overhead of collecting these statistics we executed some benchmarks using four configuration variations in the qemu-x86-64-linux-user: | |||

* '''baseline:''' without any modification. | |||

* '''tb_stats creation:''' with the creation of tb_stats enabled. | |||

* '''jit stats:''' with the collection of JIT code and translation stats enabled. | |||

* '''exec stats:''' with the collection of exec count enabled. | |||

* '''all stats:''' with both exec count and JIT code/translation enabled. | |||

All values below are the median of 10 executions and they are all slowdowns in relation to the baseline value. | |||

[[File:Tbstats-overheads.png|800px|center]] | |||

The average slowdowns were: 0.7% for tb_stats creation, 1.7% for jit stats, 227% for exec stats and 231% for all. | |||

TODO: collect and compare memory usage. | |||

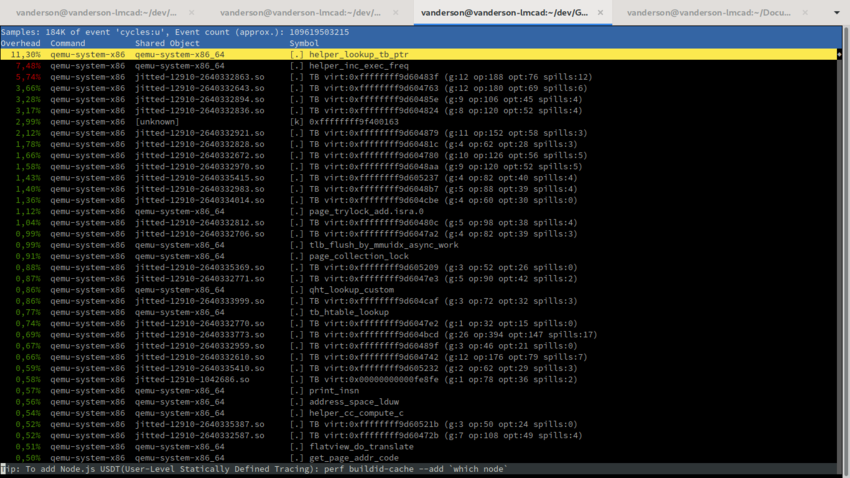

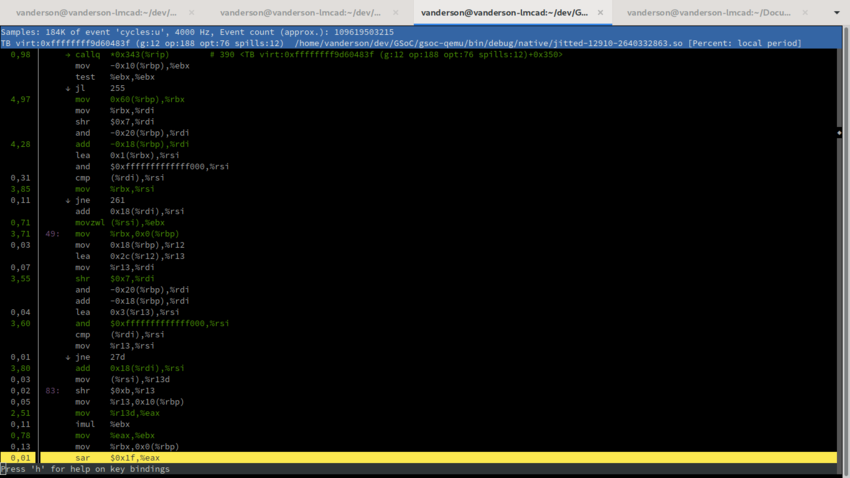

== Linux Perf Integration == | |||

Another tool added to QEMU to help investigate its performance was adding the option to dump a '''jitdump file''' using the command line argument -perf. This '''jitdump file''' can be used by Linux Perf to enhance the report of JITed code. Using such enhancement we can have the execution time relative percentage of a TB together with its guest PC. Moreover, we can explore the host code of the TB and observe which host instructions took more time in the execution. Both examples can seem in the following pictures: | |||

[[File:Perf-tbs-pcs.png|850px|center]] | |||

[[File:Perf-disas.png|850px|center]] | |||

To use perf with QEMU is as simple as using the following three commands: | |||

perf record -k 1 qemu-x86_64 -perf ./a.out | |||

perf inject -j -i perf.data -o perf.data.jitted | |||

perf report -i perf.data.jitted | |||

== Future Work == | |||

Future work would include: | Future work would include: | ||

* elide or beautify common blocks like softmmu access macros (which are always the same) | * elide or beautify common blocks like softmmu access macros (which are always the same) | ||

QEMU currently only works on translating simple basic blocks with one or two exit paths. This work could be a pre-cursor to supporting [[Internships/ProjectIdeas/Multi-exit Hot Blocks]] in the future. | * develop a safe way of collecting the gen host instructions count. | ||

* QEMU currently only works on translating simple basic blocks with one or two exit paths. This work could be a pre-cursor to supporting [[Internships/ProjectIdeas/Multi-exit Hot Blocks]] in the future. | |||

Latest revision as of 13:20, 14 February 2022

Measure Tiny Code Generation Quality

Status: Vanderson M. do Rosario <vandersonmr2@gmail.com> (vanderson on #qemu IRC) is working on this project for 2019 GSoC.

Mentor: Alex Bennée <alex.bennee@linaro.org> (stsquad on #qemu IRC)

Project Github: vandersonmr/gsoc-qemu [1]

Summary: in most applications, the majority of the execution time is spent in a very small portion of code. Regions of a code which have high-frequency execution are called hot while all other regions are called cold. As a direct consequence, emulators also spent most of their execution time emulating these hot regions and, so, dynamic compilers and translators need to pay extra attention to them. To guarantee that these hot regions are compiled/translated generating high-quality code is fundamental to achieve a final high-performance emulation. Thus, one of the most important steps in tuning an emulator performance is to identify which are the hot regions and to measure their translation quality.

TBStatistics (TBS)

Improving the code generation of the TCG backend is a hard task that involves reading through large amounts of text looking for anomalies in the generated code. It would be nice to have tools to more readily extract and parse code generation information. This would include options to dump:

- The hottest Translations Blocks (TB) and their execution count (total and atomic).

- Translation counters:

- The number of times a TB has been translated, uncached and spanned.

- The amount of time spent doing such translations and optimizations.

- Code quality metrics:

- The number of TB guest, IR (TCG ops), and host instructions.

- The Number of spills during the register allocation.

For that reason, we collect all this information dynamically for every TB or for a specific set of TBs and store it on TBStatistics structures. Every TB can have one TBStatistics linked to it by a new field inserted in the TranslationBlock structure[2]. Moreover, TBStatistics are not flushed during tb_flush and they survive longer being able to be relinked to retranslated TBs using their keys (phys_pc, pc, flags, cs_base) to matches new TBs and their TBStats.

Not all stats in a TBStatistics need to be being collected. The stats_enabled flag can be used to set which stats are going to be collected/are enabled to a particular TBStatistics. The possible flags can be found in quemu-commons.h and they are: TB_NOTHING, TB_EXEC_STATS (to collect code and translations stats), TB_JIT_STATS (to collect executions stats), TB_JIT_TIME (to collect time stats) and TB_PAUSED.

struct TBStatistics {

tb_page_addr_t phys_pc;

target_ulong pc;

uint32_t flags;

/* cs_base isn't included in the hash but we do check for matches */

target_ulong cs_base;

uint32_t stats_enabled;

/* Execution stats */

struct {

unsigned long normal;

unsigned long atomic;

/* filled only when dumping x% cover set */

uint16_t coverage;

} executions;

struct {

unsigned num_guest_inst;

unsigned num_tcg_ops;

unsigned num_tcg_ops_opt;

unsigned spills;

/* CONFIG_PROFILE */

unsigned temps;

unsigned deleted_ops;

unsigned in_len;

unsigned out_len;

unsigned search_out_len;

} code;

struct {

unsigned long total;

unsigned long spanning;

} translations;

struct {

int64_t restore;

uint64_t restore_count;

int64_t interm;

int64_t code;

int64_t opt;

int64_t la;

} time;

/* HMP information - used for referring to previous search */

int display_id;

/* current TB linked to this TBStatistics */

TranslationBlock *tb;

};

Creating and Storing TBStats

When a TB is going to be generated by calling tb_gen_code, we check if tbstats collection is enabled and if the current TB is not uncached. If so, we call tb_get_stats which either creates a new TBStatistics for that specific pc, phys_pc and flag or returns an already existing one (stored in a qht hash table in TBContext). Then, we get the default flags set through HMP or -d tb_stats argument. This default value will be used to set what stats are going to be collected for that TB.

/*

* We want to fetch the stats structure before we start code

* generation so we can count interesting things about this

* generation.

*/

if (tb_stats_collection_enabled() && !(tb->cflags & CF_NOCACHE)) {

tb->tb_stats = tb_get_stats(phys_pc, pc, cs_base, flags, tb);

uint32_t flag = get_default_tbstats_flag();

if (qemu_log_in_addr_range(tb->pc)) {

if (flag & TB_EXEC_STATS) {

tb->tb_stats->stats_enabled |= TB_EXEC_STATS;

}

}

if (flag & TB_JIT_STATS) {

tb->tb_stats->stats_enabled |= TB_JIT_STATS;

atomic_inc(&tb->tb_stats->translations.total);

}

if (flag & TB_JIT_TIME) {

tb->tb_stats->stats_enabled |= TB_JIT_TIME;

ti = profile_getclock();

}

} else {

tb->tb_stats = NULL;

}

Collecting/Filling TBStatistics Information

To collect the stats for the TBStatistics, different parts of the QEMU source code were changed. We list here how we collected each information for each field.

TB_EXEC_STATS

Normal Execution Count

To collect the execution count of each TB, we instrument the begin of each one of them, adding a call to a helper function called exec_freq. This instrumentation is done in the gen_tb_exec_count function. The exec_freq helper receives the address of the TBStatistic structure linked to the TB.

include/exec/gen-icount.h:

static inline void gen_tb_exec_count(TranslationBlock *tb)

{

if (tb_stats_enabled(tb, TB_EXEC_STATS)) {

TCGv_ptr ptr = tcg_const_ptr(tb->tb_stats);

gen_helper_inc_exec_freq(ptr);

tcg_temp_free_ptr(ptr);

}

}

The helper function access the field executions.total of the TBStatistic structure (which address was passed as a parameter) and increment it atomically counting one more execution of the TB. This is done on every execution start of a TB.

accel/tcg/tcg-runtime.c:

void HELPER(inc_exec_freq)(void *ptr)

{

TBStatistics *stats = (TBStatistics *) ptr;

g_assert(stats);

atomic_inc(&stats->executions.normal);

}

Atomic Execution Count

TBs can also be executed atomically and we count how many times this is done by incrementing the executions.atomic field every time cpu_exec_step_atomic is called.

void cpu_exec_step_atomic(CPUState *cpu)

....

if (tb_stats_enabled(tb, TB_EXEC_STATS)) {

tb->tb_stats->executions.atomic++;

}

....

Coverage

The field coverage of a TB measures the relative proportion of executed guest instructions that this TB represents when compared to the whole execution. To do so, we calculate these coverages of each TB before dumping any statistics by calling a function named calculate_last_search_coverages. This function basically counts the total number of guest instructions executed and then divide the number of guest instructions of each TB by the total getting the coverage percentage for that TB.

static uint64_t calculate_last_search_coverages(void)

{

uint64_t total_exec_count = 0;

GList *i;

/* Compute total execution count for all tbs */

for (i = last_search; i; i = i->next) {

TBStatistics *tbs = (TBStatistics *) i->data;

total_exec_count +=

(tbs->executions.atomic + tbs->executions.normal) * tbs->code.num_guest_inst;

}

for (i = last_search; i; i = i->next) {

TBStatistics *tbs = (TBStatistics *) i->data;

uint64_t tb_total_execs =

(tbs->executions.atomic + tbs->executions.normal) * tbs->code.num_guest_inst;

tbs->executions.coverage = (10000 * tb_total_execs) / (total_exec_count + 1);

}

return total_exec_count;

}

TB_JIT_STATS

Spanning and Total Translations

We count the number of translations of a region by incrementing its TBStatistics translations.total for every time that tb_gen_code is called. TBStatistics survive even after flushes and can be recovered and linked to a new translation of a region using its phys_pc, pc, cs_base and flags.

We also count how many times a TB spans by atomically incrementing the translations.spanning field after the span.

accel/tcg/translate-all.c:

TranslationBlock *tb_gen_code(CPUState *cpu,

target_ulong pc, target_ulong cs_base,

uint32_t flags, int cflags)

...

if (tb_stats_collection_enabled()) {

tb->tb_stats = tb_get_stats(phys_pc, pc, cs_base, flags, tb);

...

if (flag & TB_JIT_STATS) {

tb->tb_stats->stats_enabled |= TB_JIT_STATS;

atomic_inc(&tb->tb_stats->translations.total);

}

...

}

...

if ((pc & TARGET_PAGE_MASK) != virt_page2) {

phys_page2 = get_page_addr_code(env, virt_page2);

if (tb_stats_enabled(tb, TB_JIT_STATS)) {

atomic_inc(&tb->tb_stats->translations.spanning);

}

}

...

Guest Instructions

The guest instructions are already counted in the DisasContextBase (db->num_insns) in the translator_loop, so we simply add it to the code.num_guest_inst.

accel/tcg/translator.c:

void translator_loop(const TranslatorOps *ops, DisasContextBase *db,

CPUState *cpu, TranslationBlock *tb, int max_insns)

....

if (tb_stats_enabled(tb, TB_JIT_STATS)) {

atomic_add(&db->tb->tb_stats->code.num_guest_inst, db->num_insns);

}

....

Host Code Size

QEMU does not have any specific way of couting the number of host instructions. Moreover, we realize that implementing such function would need to modify many target-specific code and it would be unfeasible for this GSoC. So, instead, we count the size in bytes of the generated host code that is already calculated in the tb_gen_code function.

accel/tcg/translate-all.c:

TranslationBlock *tb_gen_code(CPUState *cpu,

target_ulong pc, target_ulong cs_base,

uint32_t flags, int cflags)

...

if (tb_stats_enabled(tb, TB_JIT_STATS)) {

atomic_add(&tb->tb_stats->code.out_len, gen_code_size);

}

...

TCG Instructions

To count the number of TCG instructions we need only to iterate over the ops in the TCGContext and them store the result in the code.num_tcg_inst. We do this twice, before and after aplying the optimizations, filling num_tcg_ops and num_tcg_ops_opt.

tcg/tcg.c:

int tcg_gen_code(TCGContext *s, TranslationBlock *tb)

....

if (tb_stats_enabled(tb, TB_JIT_STATS)) {

int n = 0;

QTAILQ_FOREACH(op, &s->ops, link) {

n++;

}

atomic_add(&tb->tb_stats->code.num_tcg_ops, n);

}

....

Spills

We increment the code.spills counter after a load is generated to deal if a register spill in the function temp_sync.

static void temp_sync(...)

...

case TEMP_VAL_REG:

tcg_out_st(s, ts->type, ts->reg,

ts->mem_base->reg, ts->mem_offset);

/* Count number of spills */

if (tb_stats_enabled(s->current_tb, TB_JIT_STATS)) {

atomic_inc(&s->current_tb->tb_stats->code.spills);

}

break;

...

TB_JIT_TIME and Old CONFIG_PROFILER Stats

Other fields in TBStatistics including the ones related to the measurement of time all came from an old global scheme of profiling of QEMU. This profiling was controlled by a CONFIG_PROFILER flag that should be set during compilation. We decided that all statistics should now concentrate in the TBStatistics structure and there was no reason to maintain two mechanisms of profiling. Moreover, instead of collecting these statistics globally we now collect them per TB, having more information and still being able to reconstruct the global profiling by summing up all TB stats.

In each region of code where there was a #ifdef CONFIG_PROFILER follow by updates of global statistics, we changed to an update of the TB stats as shows the following example:

-#ifdef CONFIG_PROFILER - atomic_set(&prof->restore_time, - prof->restore_time + profile_getclock() - ti); - atomic_set(&prof->restore_count, prof->restore_count + 1); -#endif

+ if (tb_stats_enabled(tb, TB_JIT_TIME)) {

+ atomic_add(&tb->tb_stats->time.restore, profile_getclock() - ti);

+ atomic_inc(&tb->tb_stats->time.restore_count);

+ }

Controling and Dumping TBStatistics

There are two ways of controlling the collection of statistics: before execution by using the -d tb_stats command argument or by using the tb_stats HMP command in the QEMU monitor. In both, you can choose which will be the default level of profiling collection of the tbs (exec_stats, jit_stats, jit_time, all).

Further, the HMP tb_stats command can be also used to pause, stop or restart the profiling during the execution in system mode.

To dump a list TBs stats, the HMP command "info tb-list" can be used. "info tb-list" receive as parameter the number of tbs to be dumped and which metric it should be sorted by: hotness, hg (host/guest), or spills. Follows an example of the output of three TBs stats sorted by hotness (execution frequency):

info tb-list

TB id:1 | phys:0x34d54 virt:0x0000000000034d54 flags:0x0000f0 | exec:4828932/0 guest inst cov:16.38% | trans:1 ints: g:3 op:82 op_opt:34 spills:3 | h/g (host bytes / guest insts): 90.666664 | time to gen at 2.4GHz => code:3150.83(ns) IR:712.08(ns) | targets: 0x0000000000034d5e (id:11), 0x0000000000034d0d (id:2) TB id:2 | phys:0x34d0d virt:0x0000000000034d0d flags:0x0000f0 | exec:4825842/0 guest inst cov:21.82% | trans:1 ints: g:4 op:80 op_opt:38 spills:2 | h/g (host bytes / guest insts): 84.000000 | time to gen at 2.4GHz => code:3362.92(ns) IR:793.75(ns) | targets: 0x0000000000034d19 (id:12), 0x0000000000034d54 (id:1) TB id:3 | phys:0xec1c1 virt:0x00000000000ec1c1 flags:0x0000b0 | exec:872032/0 guest inst cov:1.97% | trans:1 ints: g:2 op:56 op_opt:26 spills:1 | h/g (host bytes / guest insts): 68.000000 | time to gen at 2.4GHz => code:1692.08(ns) IR:473.75(ns) | targets: 0x00000000000ec1c5 (id:4), 0x00000000000ec1cb (id:13)

If necessary to iteratively examine one of this listed tbs the info tb id command can be used, where the id comes from the TBs listed by "info tb-list". The following example shows the result of dumping the guest instructions of tb with id 1.

info tb

TB id:2 | phys:0x34d0d virt:0x0000000000034d0d flags:0x0000f0 | exec:6956495/0 guest inst cov:21.82% | trans:2 ints: g:2 op:40 op_opt:19 spills:1 | h/g (host bytes / guest insts): 84.000000 | time to gen at 2.4GHz => code:3130.83(ns) IR:722.50(ns) | targets: 0x0000000000034d19 (id:12), 0x0000000000034d54 (id:1) ---------------- IN: 0x00034d0d: 89 de movl %ebx, %esi 0x00034d0f: 26 8b 0e movl %es:(%esi), %ecx 0x00034d12: 26 f6 46 08 80 testb $0x80, %es:8(%esi) 0x00034d17: 75 3b jne 0x34d54 ------------------------------

In the example the guest instruction of the TB was dumped, but level of code being dumped can be select by a second parameter to "info tb" that can be: out_asm for dumping the host code of the TB, in_asm for dumping the guest code and op and op_opt for dumping the QEMU TCG IR for that TB.

info cfg

info tb only shows information of one TB, but info cfg id can be used to create a .dot representing the Control Flow Graph (CFG) of the neighborhood of a TB. Each node in the graph has the guest code of the TB and its execution frequency with the colors of the nodes representing its relative hotness in the neighborhood. The image below shows an example of such CFG.

info coverset

Finally, there is an option to dump not the "n" hottest blocks but all necessary hot blocks to achieve m% of the total execution counting (info coverset m). This is a useful metric to understand the execution footprint of a program. More execution dense applications will have a smaller number of blocks to achieve m% of the execution while sparse ones will have a larger number. Dynamic compilers normally have better performance with applications with a small number of blocks needed to achieve the 90% coverset.

TB id:1 | phys:0x34d54 virt:0x0000000000034d54 flags:0x0000f0 | exec:5202686/0 guest inst cov:11.28% | trans:1 ints: g:3 op:82 op_opt:34 spills:3 | h/g (host bytes / guest insts): 90.666664 | time to gen at 2.4GHz => code:2793.75(ns) IR:614.58(ns) | targets: 0x0000000000034d5e (id:3), 0x0000000000034d0d (id:2) TB id:2 | phys:0x34d0d virt:0x0000000000034d0d flags:0x0000f0 | exec:5199468/0 guest inst cov:15.03% | trans:1 ints: g:4 op:80 op_opt:38 spills:2 | h/g (host bytes / guest insts): 84.000000 | time to gen at 2.4GHz => code:2958.75(ns) IR:719.58(ns) | targets: 0x0000000000034d19 (id:4), 0x0000000000034d54 (id:1) ------------------------------ 2 TBs to reach 25% of guest inst exec coverage Total of guest insts exec: 138346727 ------------------------------

Overheads

To understand the overhead of collecting these statistics we executed some benchmarks using four configuration variations in the qemu-x86-64-linux-user:

- baseline: without any modification.

- tb_stats creation: with the creation of tb_stats enabled.

- jit stats: with the collection of JIT code and translation stats enabled.

- exec stats: with the collection of exec count enabled.

- all stats: with both exec count and JIT code/translation enabled.

All values below are the median of 10 executions and they are all slowdowns in relation to the baseline value.

The average slowdowns were: 0.7% for tb_stats creation, 1.7% for jit stats, 227% for exec stats and 231% for all.

TODO: collect and compare memory usage.

Linux Perf Integration

Another tool added to QEMU to help investigate its performance was adding the option to dump a jitdump file using the command line argument -perf. This jitdump file can be used by Linux Perf to enhance the report of JITed code. Using such enhancement we can have the execution time relative percentage of a TB together with its guest PC. Moreover, we can explore the host code of the TB and observe which host instructions took more time in the execution. Both examples can seem in the following pictures:

To use perf with QEMU is as simple as using the following three commands:

perf record -k 1 qemu-x86_64 -perf ./a.out perf inject -j -i perf.data -o perf.data.jitted perf report -i perf.data.jitted

Future Work

Future work would include:

- elide or beautify common blocks like softmmu access macros (which are always the same)

- develop a safe way of collecting the gen host instructions count.

- QEMU currently only works on translating simple basic blocks with one or two exit paths. This work could be a pre-cursor to supporting Internships/ProjectIdeas/Multi-exit Hot Blocks in the future.