Features/PostCopyLiveMigration

| (19 intermediate revisions by 4 users not shown) | |||

| Line 1: | Line 1: | ||

== | == Feature Name == | ||

Post-copy based live migration | |||

(merged in 2.5) | (merged in 2.5) | ||

| Line 6: | Line 6: | ||

* '''Name:''' [[User:Dgilbert|Dave Gilbert]] | * '''Name:''' [[User:Dgilbert|Dave Gilbert]] | ||

* ''' Email:''' dgilbert@redhat.com | * ''' Email:''' dgilbert@redhat.com | ||

* ''' IRC: ''' davidgiluk (oftc/freenode) | |||

== Current Status == | == Current Status == | ||

| Line 14: | Line 15: | ||

A postcopy implementation that allows migration of guests that have large page change rates (relative to the available bandwidth) to be migrated in a finite time. | A postcopy implementation that allows migration of guests that have large page change rates (relative to the available bandwidth) to be migrated in a finite time. | ||

VMs of any size running any workload can be migrated. | VMs of any size running any workload can be migrated. | ||

postcopy means the VM starts running on the destination host as soon as possible, and the RAM from the source host is page faulted into the destination over time. This ensures there is a minimal downtime for the VM as compared to precopy, where the migration can take a lot of time depending on the workload and page dirtying rate of the VM. | |||

== Scope == | |||

* Changes in QEMU, OS support required | |||

* On Linux: userfaultfd in the 4.3 kernel is needed (Now supported on most architectures, tested mainly on x86, some testing on Power and aarch64, s390 support being worked on) | |||

== How to use == | == How to use == | ||

Enables postcopy mode before the start of migration: | Enables postcopy mode before the start of migration: | ||

migrate_set_capability | migrate_set_capability postcopy-ram on | ||

Start the migration as normal: | Start the migration as normal: | ||

migrate tcp:destination:port | migrate tcp:destination:port | ||

Change into postcopy mode; this can be issued any time after the start of migration, for most workloads it's best to wait until one cycle of RAM migration has completed (i.e. the sync count hits 2 in info migrate). | |||

Change into postcopy mode; this can be issued any time after the start of migration, for most workloads it's best to wait until one cycle of RAM migration has completed (i.e. the sync count hits 2 in info migrate). | |||

If you're using the HMP (Human Monitor Command), issue: | |||

migrate_start_postcopy | migrate_start_postcopy | ||

Or, if you're using the QMP (QEMU Machine Protocol), issue this equivalent command: | |||

migrate-start-postcopy | |||

NB: When using QMP You might want to use a convenient script like [http://git.qemu.org/?p=qemu.git;a=blob;f=qapi-schema.json;h=8b1a423 qmp-shell] (from QEMU Git source). | |||

== Added commands / state == | |||

* Migration capability: postcopy-ram | |||

* New command: migrate_start_postcopy (HMP) / migrate-start-postcopy (QMP) | |||

* New migration state: postcopy-active | |||

== Design == | == Design == | ||

This postcopy implementation uses the Linux ' | This postcopy implementation uses the Linux 'userfaultfd' kernel mechanisms from Andrea Arcangeli; it's not specific | ||

to | to postcopy and is designed to allow use with all of the standard kernel features (like transparent huge pages, KSM etc). | ||

It requires Linux 4.3 or newer. | It requires Linux 4.3 or newer. | ||

At the start of postcopy mode, all RAM Blocks are registered with 'userfaultfd' so that any accesses to those pages cause the accessing thread to pause. | |||

A separate thread reads messages from the kernel about pages that have been accessed and forwards requests to the source host. The source host | |||

queues those requests and sends the pages ahead of any background page sending (which still carries on during postcopy mode). As pages arrive | |||

on the destination they are placed into memory using new ioctl's that are associated with userfaultfd that cause the page to be copied into the | |||

address space atomically and the paused threads to continue. | |||

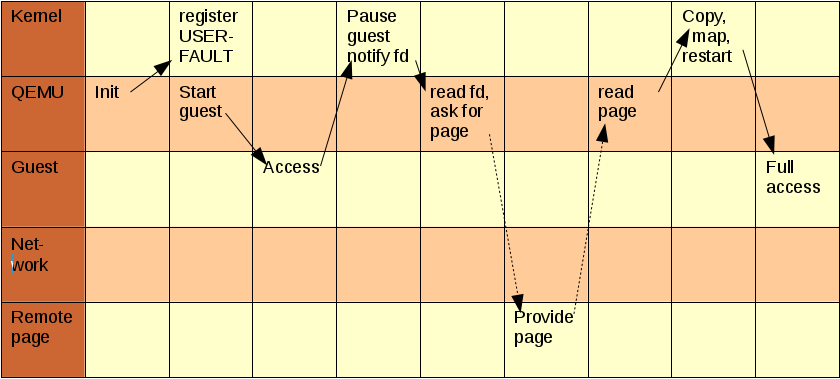

[[File:postcopyflow.png]] | [[File:postcopyflow.png]] | ||

| Line 45: | Line 70: | ||

* 'command' section type for sending migration commands that don't directly reflect guest state; this is used to send messages that move through different phases of postcopy and is expandable for use by others. | * 'command' section type for sending migration commands that don't directly reflect guest state; this is used to send messages that move through different phases of postcopy and is expandable for use by others. | ||

* 'return path' a method for the destination to send messages back to the source; used for postcopy page requests, and allows the destination to signal failure back to the source; this is currently supported on TCP and fd (where the fd is socket backed). | * 'return path' a method for the destination to send messages back to the source; used for postcopy page requests, and allows the destination to signal failure back to the source; this is currently supported on TCP and fd (where the fd is socket backed). | ||

* ' | * 'unsent map' a bitmap on the source populated with the set of all pages that have not-already been transmitted | ||

* ' | * A 'package' command which holds a subset of the migration stream. The state of all non-iterative devices (i.e. disk, timer, CPU etc) are stored in a 'package' and sent as one blob across the migration stream. The destination reads this package off the stream, thus freeing the stream for any page requests that are required while loading the devices themselves. | ||

* 'discard' commands cause some pages that have already been transmitted to be discarded at the start of the postcopy phase. These are pages that have been transmitted and redirtied, or (on Power/aarch64/anything else where host-page size is larger than target-page size) host pages that have been partially transmitted. | |||

== Conflicts == | |||

* RDMA: we need to find a way to get pages coming via RDMA to appear atomically; probably by copying into a temporary buffer and then being placed. | |||

* File backed memory areas (e.g. HugeTLBFS): Requires kernel support, also needs a mechanism to transmit huge pages as required (HugeTLBFS supported as of 2.9) | |||

* multi-threaded compression: The decompression currently decompresses straight into memory; we need it to place the pages atomically when in postcopy | |||

* The transport in use must support messages in the opposite direction | |||

* Shared memory | |||

== | == Future Enhancements == | ||

* optimization - rate limit the background page transmission to reduce the impact on the latency of postcopy page requests. | * optimization - rate limit the background page transmission to reduce the impact on the latency of postcopy page requests. | ||

* Integration with RDMA | * Integration with RDMA | ||

* Handle | * Handle mappings from files. | ||

* Handle shared memory | |||

Links

- Libvirt work to drive postcopy: https://github.com/orbitfp7/libvirt/commits/wp3-postcopy

- OpenStack work to drive postcopy: https://github.com/orbitfp7/nova/commits/post-copy

- This work was part funded by the EU ORBIT project: http://www.orbitproject.eu/

- Previous postcopy implementation as part of Yabusame: Features/PostCopyLiveMigrationYabusame

Category: Completed feature pages

Latest revision as of 12:28, 16 March 2017

Feature Name

Post-copy based live migration (merged in 2.5)

Owner

- Name: Dave Gilbert

- Email: dgilbert@redhat.com

- IRC: davidgiluk (oftc/freenode)

Current Status

- Last updated: 2015-11-24

- Released in: QEMU 2.5

Summary

A postcopy implementation that allows guests with high page-dirtying rates (relative to the available bandwidth) to be migrated in a finite time. VMs of any size running any workload can be migrated.

Postcopy means the VM starts running on the destination host as soon as possible, and its RAM is then page-faulted from the source host into the destination over time. This keeps the VM's downtime minimal and bounds the total migration time, unlike precopy, where migration can take a long time (or never complete) depending on the workload and the VM's page-dirtying rate.
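To see why a high dirtying rate relative to bandwidth can stop precopy from finishing, here is a deliberately idealized model. It is an assumption for illustration, not QEMU's algorithm: the dirty rate and bandwidth are constant, and each precopy pass resends whatever was dirtied while the previous pass was being sent. The data left shrinks by a factor of dirty rate / bandwidth per pass, so it only converges when that ratio is below 1, whereas postcopy needs roughly one pass over RAM regardless of the dirty rate. The function names and parameters are hypothetical.

```python
def precopy_passes(ram_mb, dirty_mb_s, bandwidth_mb_s, stop_mb, max_passes=100):
    """Idealized precopy model (illustrative assumption, not QEMU's code).

    Returns how many passes over RAM are needed before the data still to
    send drops to stop_mb (small enough to send while the VM is paused),
    or None if that never happens within max_passes.
    """
    remaining = float(ram_mb)  # the first pass sends all of RAM
    for passes in range(1, max_passes + 1):
        seconds = remaining / bandwidth_mb_s  # time to send this pass
        # Pages dirtied meanwhile must be resent; that can't exceed RAM size.
        remaining = min(float(ram_mb), dirty_mb_s * seconds)
        if remaining <= stop_mb:
            return passes
    return None


def postcopy_seconds(ram_mb, bandwidth_mb_s):
    """Postcopy sends each page about once: time is roughly RAM / bandwidth."""
    return ram_mb / bandwidth_mb_s
```

With 8192 MB of RAM, 1000 MB/s of bandwidth and a 100 MB stopping point, `precopy_passes(8192, 500, 1000, 100)` returns 7, while raising the dirty rate to 1500 MB/s makes it return None; `postcopy_seconds(8192, 1000)` stays at about 8.2 seconds either way.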

Scope

- Changes in QEMU; OS support is also required

- On Linux: userfaultfd support, available since kernel 4.3, is needed (now supported on most architectures; tested mainly on x86, with some testing on Power and aarch64; s390 support is being worked on)
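A quick way to check the kernel-version requirement before trying postcopy is sketched below. It only compares the release string reported by `platform.release()` (meaningful on Linux hosts) against 4.3; it cannot tell whether the kernel was actually built with userfaultfd (CONFIG_USERFAULTFD), so treat it as a first filter rather than a guarantee. The function name is hypothetical.

```python
import platform
import re


def kernel_supports_userfaultfd(release=None):
    """Return True if a Linux kernel release string is 4.3 or newer.

    Only the version number is checked; whether the kernel was built
    with userfaultfd enabled is NOT verified here.
    """
    if release is None:
        release = platform.release()  # e.g. "4.4.0-21-generic" on Linux
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        return False  # unrecognised format: don't assume support
    major, minor = int(match.group(1)), int(match.group(2))
    return (major, minor) >= (4, 3)
```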

How to use

Enable postcopy mode before starting the migration:

migrate_set_capability postcopy-ram on

Start the migration as normal:

migrate tcp:destination:port

Then switch into postcopy mode. This can be done any time after the migration has started, but for most workloads it's best to wait until one cycle of RAM migration has completed (i.e. the sync count shown by info migrate reaches 2).

If you're using HMP (the Human Monitor Protocol), issue:

migrate_start_postcopy

Or, if you're using QMP (the QEMU Machine Protocol), issue the equivalent command:

migrate-start-postcopy

NB: When using QMP, you might want to use a convenience script such as qmp-shell (from the QEMU Git source tree).
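For a management tool driving QMP directly, the same steps might look like the sketch below. The QMP command names (migrate-set-capabilities, migrate, query-migrate, migrate-start-postcopy) and the dirty-sync-count field of query-migrate's RAM statistics are QMP's own; the helper functions, the example URI and the threshold parameter are hypothetical, and connecting to the QMP socket (including the initial qmp_capabilities handshake) is left out.

```python
import json


def qmp(command, **arguments):
    """Encode one QMP command as a JSON string (transport not shown)."""
    message = {"execute": command}
    if arguments:
        message["arguments"] = arguments
    return json.dumps(message)


def postcopy_setup_commands(uri):
    """Commands that enable postcopy and then start a normal migration."""
    return [
        qmp("migrate-set-capabilities",
            capabilities=[{"capability": "postcopy-ram", "state": True}]),
        qmp("migrate", uri=uri),
    ]


def ready_for_postcopy(query_migrate_reply, min_sync_count=2):
    """Decide from a query-migrate reply whether to switch to postcopy.

    Waits for one full cycle of RAM migration, i.e. until the dirty
    sync count reaches 2.
    """
    info = query_migrate_reply.get("return", {})
    if info.get("status") != "active":
        return False
    return info.get("ram", {}).get("dirty-sync-count", 0) >= min_sync_count


# Sent once ready_for_postcopy() returns True.
START_POSTCOPY = qmp("migrate-start-postcopy")
```

A caller would send the setup commands, poll query-migrate periodically until `ready_for_postcopy` returns True, and then send `START_POSTCOPY`.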

Added commands / state

- Migration capability: postcopy-ram

- New command: migrate_start_postcopy (HMP) / migrate-start-postcopy (QMP)

- New migration state: postcopy-active

Design

This postcopy implementation uses the Linux 'userfaultfd' kernel mechanism by Andrea Arcangeli. userfaultfd is not specific to postcopy and is designed to work with all the standard kernel features (such as transparent huge pages and KSM). It requires Linux 4.3 or newer.

At the start of postcopy mode, all RAM blocks are registered with 'userfaultfd', so that any access to a page that has not yet arrived pauses the accessing thread. A separate thread reads messages from the kernel about the pages that were accessed and forwards requests for them to the source host. The source host queues those requests and sends the requested pages ahead of the background page sending, which still carries on during postcopy mode. As pages arrive on the destination, they are placed into memory using new userfaultfd ioctls (such as UFFDIO_COPY), which copy each page into the address space atomically and wake the paused threads.

[Figure: postcopyflow.png]
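The source side of this flow can be modeled as a scheduler that always serves queued page requests before continuing its background scan, with a set of unsent pages standing in for the unsent bitmap so that no page is sent twice. This is a single-threaded sketch with hypothetical names (PageScheduler, request, next_page); QEMU itself works in terms of RAMBlocks, offsets and bitmaps rather than a Python set of page numbers.

```python
from collections import deque


class PageScheduler:
    """Illustrative model of postcopy page ordering on the source.

    Requested pages jump ahead of the background scan; the "unsent" set
    (a stand-in for the unsent bitmap) stops a page being sent twice.
    """

    def __init__(self, num_pages):
        self.num_pages = num_pages
        self.unsent = set(range(num_pages))
        self.requests = deque()     # page requests from the destination
        self.background_cursor = 0  # next page for the background scan

    def request(self, page):
        """Queue a page that a destination thread faulted on."""
        self.requests.append(page)

    def next_page(self):
        """Return the next page to send, or None once everything is sent."""
        # Requested pages first: a guest thread is waiting on each of them.
        while self.requests:
            page = self.requests.popleft()
            if page in self.unsent:
                self.unsent.discard(page)
                return page
        # Otherwise carry on with the linear background scan.
        while self.background_cursor < self.num_pages:
            page = self.background_cursor
            self.background_cursor += 1
            if page in self.unsent:
                self.unsent.discard(page)
                return page
        return None
```

Requests for pages that were already sent are simply dropped here; the background scan also skips pages that were sent early because they were requested.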

Guest page faults are handled asynchronously, so multiple faults can be outstanding at once, allowing useful work to continue despite the latency of fetching each page.
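The pause-and-wake behaviour, with several faults outstanding at the same time, can be imitated with ordinary threads. In this toy model (hypothetical class and function names; it does not use the userfaultfd API) each missing page has an Event: a thread touching a missing page queues a request and blocks only itself, and placing the page wakes it.

```python
import queue
import threading


class DestinationMemory:
    """Toy model of destination RAM filled in while guest threads wait."""

    def __init__(self, num_pages):
        self.pages = [None] * num_pages
        self.arrived = [threading.Event() for _ in range(num_pages)]
        self.requests = queue.Queue()  # stands in for requests to the source

    def read(self, page):
        if not self.arrived[page].is_set():
            self.requests.put(page)    # "fault": ask the source for the page
            self.arrived[page].wait()  # pause only this thread
        return self.pages[page]

    def place(self, page, data):
        """Install a page, then wake any threads waiting on it."""
        self.pages[page] = data
        self.arrived[page].set()


def demo():
    """Three guest threads fault on different pages at the same time."""
    mem = DestinationMemory(4)
    results = {}

    def vcpu(name, page):
        results[name] = mem.read(page)

    threads = [threading.Thread(target=vcpu, args=(name, page))
               for name, page in (("cpu0", 3), ("cpu1", 1), ("cpu2", 2))]
    for t in threads:
        t.start()
    # All three requests are outstanding before any page is delivered.
    pending = [mem.requests.get(timeout=5) for _ in threads]
    for page in sorted(pending, reverse=True):  # delivery order is arbitrary
        mem.place(page, "page-%d" % page)
    for t in threads:
        t.join(timeout=5)
    return sorted(pending), results
```

`demo()` collects all three requests before delivering anything, which only works because each fault blocks just the thread that caused it.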

Major components

Where possible, the design builds components that other features can reuse.

- 'command' section type: used for sending migration commands that don't directly reflect guest state. It carries the messages that move the migration through the different phases of postcopy and can be extended for use by other features.

- 'return path': a way for the destination to send messages back to the source. It is used for postcopy page requests and lets the destination signal failure back to the source. It is currently supported on TCP and fd (where the fd is socket-backed).

- 'unsent map': a bitmap on the source marking all pages that have not yet been transmitted.

- 'package' command: holds a subset of the migration stream. The state of all non-iterative devices (e.g. disk, timer, CPU) is stored in a 'package' and sent as one blob across the migration stream. The destination reads the whole package off the stream first, which frees the stream for any page requests needed while the devices themselves are being loaded.

- 'discard' commands: cause some already-transmitted pages to be discarded at the start of the postcopy phase. These are pages that were transmitted and then re-dirtied, or (on Power, aarch64, or any other host where the host page size is larger than the target page size) host pages that were only partially transmitted.
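The discard rule can be sketched as a computation over per-target-page flags. This assumes two hypothetical boolean lists, `sent` and `dirty`, indexed by target page, and a host page made of `ratio` target pages (1 when host and target page sizes match; larger when, say, a 64 KiB-page host runs a 4 KiB-page guest). It illustrates the rule described above, not QEMU's implementation.

```python
def host_pages_to_discard(sent, dirty, ratio):
    """Return indices of host pages to discard when postcopy starts.

    sent[i] / dirty[i] describe target page i (hypothetical inputs);
    `ratio` target pages make up one host page.
    """
    if len(sent) != len(dirty) or len(sent) % ratio:
        raise ValueError("page lists must cover whole host pages")
    discards = []
    for host in range(len(sent) // ratio):
        span = range(host * ratio, (host + 1) * ratio)
        # Sent and then re-dirtied: the destination's copy is stale.
        redirtied = any(sent[i] and dirty[i] for i in span)
        # Only part of the host page was sent: it can't be completed
        # atomically later, so the whole host page has to be resent.
        partial = any(sent[i] for i in span) and not all(sent[i] for i in span)
        if redirtied or partial:
            discards.append(host)
    return discards
```

With `ratio=1` only the re-dirtied condition can trigger; the partial-transmission case only arises when a host page spans several target pages.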

Conflicts

- RDMA: pages arriving via RDMA need to be made to appear atomically, probably by copying them into a temporary buffer and then placing them.

- File-backed memory areas (e.g. HugeTLBFS): these require kernel support, and also need a mechanism to transmit huge pages as required (HugeTLBFS is supported as of QEMU 2.9).

- Multi-threaded compression: decompression currently writes straight into memory; in postcopy it must place the pages atomically instead.

- The transport in use must support messages in the opposite direction (from destination to source).

- Shared memory
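The RDMA and compression items come down to the same rule: in postcopy a page must become visible to the guest all at once. A minimal sketch of that pattern, assuming zlib-compressed 4 KiB pages and a bytearray standing in for guest RAM, is to decompress into a temporary buffer, check that it is exactly one page, and only then copy it into place while holding a lock. The lock is only an analogy for the atomic placement the userfaultfd ioctls provide; the class, method names and page size are hypothetical.

```python
import threading
import zlib

PAGE_SIZE = 4096  # assumed target page size for this sketch


class GuestRAM:
    """Toy guest memory where pages are only ever installed whole."""

    def __init__(self, num_pages):
        self.ram = bytearray(num_pages * PAGE_SIZE)
        self.present = [False] * num_pages
        self.lock = threading.Lock()  # stand-in for atomic placement

    def place_compressed(self, page, compressed):
        # 1. Decompress into a temporary buffer, never straight into RAM.
        data = zlib.decompress(compressed)
        if len(data) != PAGE_SIZE:
            raise ValueError("decompressed data is not exactly one page")
        # 2. Install the complete page in one step and mark it present.
        start = page * PAGE_SIZE
        with self.lock:
            self.ram[start:start + PAGE_SIZE] = data
            self.present[page] = True

    def read(self, page):
        with self.lock:
            if not self.present[page]:
                return None  # a real guest access would fault here
            start = page * PAGE_SIZE
            return bytes(self.ram[start:start + PAGE_SIZE])
```

A reader can therefore see either no page or the whole page, never a half-decompressed one.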

Future Enhancements

- Optimization: rate-limit the background page transmission to reduce its impact on the latency of postcopy page requests.

- Integration with RDMA

- Handle mappings from files.

- Handle shared memory
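One possible shape for the rate-limiting optimization is a token bucket applied only to background pages, leaving requested pages unthrottled. This is a sketch of that idea under assumed parameters (a byte budget per second, a burst size and an injectable clock for testing); the class and its names are hypothetical, and it is not how QEMU implements its bandwidth limit.

```python
import time


class BackgroundRateLimiter:
    """Token bucket that throttles background pages only.

    Requested (urgent) pages are always allowed; background pages go out
    only while the bucket holds enough bytes of credit.
    """

    def __init__(self, bytes_per_second, burst_bytes, clock=time.monotonic):
        self.rate = float(bytes_per_second)
        self.capacity = float(burst_bytes)
        self.tokens = float(burst_bytes)
        self.clock = clock
        self.last = clock()

    def _refill(self):
        now = self.clock()
        self.tokens = min(self.capacity,
                          self.tokens + (now - self.last) * self.rate)
        self.last = now

    def may_send(self, nbytes, urgent):
        """Return True if a page of nbytes may be sent now."""
        if urgent:
            return True  # never delay a page a guest thread is waiting for
        self._refill()
        if self.tokens >= nbytes:
            self.tokens -= nbytes
            return True
        return False
```

Keeping requested pages outside the bucket is the point of the design: the aim is to cut request latency, so throttling those pages as well would defeat it.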