Features/RDMALiveMigration

Summary

Live migration using RDMA instead of TCP.

Contact

- Name: Michael Hines

- Email: mrhines@us.ibm.com

Description

Uses the standard OFED software stack, which supports both RoCE and InfiniBand.

Usage

Compiling:

$ ./configure --enable-rdma --target-list=x86_64-softmmu

$ make

Run the following on both the source and the destination machine:

$ virsh qemu-monitor-command domain --hmp --cmd "migrate_set_speed 40g" # or the maximum bandwidth of your RDMA device

Finally, perform the actual migration from the source machine:

$ virsh migrate domain rdma:xx.xx.xx.xx:port

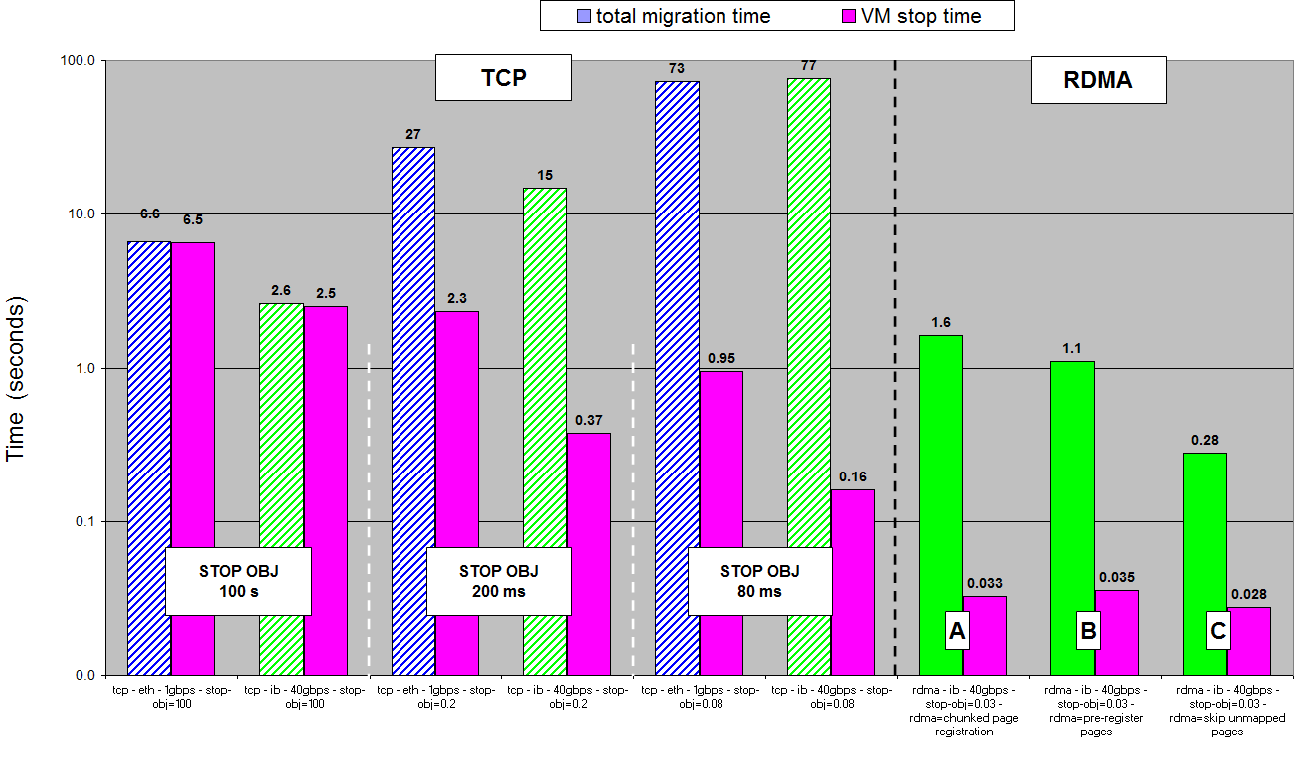

Performance

Protocol Design

- In order to provide maximum cross-device compatibility, we use the librdmacm library, which abstracts away the RDMA capabilities of each individual type of RDMA device, including InfiniBand, iWARP and RoCE. This patch has been tested on both RoCE and InfiniBand devices from Mellanox.

- Currently, the XBZRLE capability and the detection of zero pages (dup_page()) significantly reduce the empirical throughput observed when RDMA is active, so the code path skips these capabilities when RDMA is enabled. Hopefully, in the future we can find a way to preserve these capabilities while using RDMA.

We use two kinds of RDMA messages:

1. RDMA WRITEs (to the receiver)
2. RDMA SEND (for non-live state, like devices and CPU)

First, migration-rdma.c does the initial connection establishment using the URI 'rdma:host:port' on the QMP command line.
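As an illustration of the URI format only, here is a minimal sketch that splits 'rdma:host:port' into a host and a port. It assumes the port is the field after the last colon and that IPv6 literals are written in brackets; `parse_rdma_uri` is a hypothetical helper, not the parser in migration-rdma.c.

```python
def parse_rdma_uri(uri: str) -> tuple[str, int]:
    """Split a hypothetical 'rdma:host:port' URI into (host, port)."""
    prefix = "rdma:"
    if not uri.startswith(prefix):
        raise ValueError(f"not an RDMA URI: {uri!r}")
    rest = uri[len(prefix):]
    # Split on the last colon so the port is always the final field.
    host, sep, port_str = rest.rpartition(":")
    if not sep or not host or not port_str.isdigit():
        raise ValueError(f"expected rdma:host:port, got {uri!r}")
    # Assumption: IPv6 literals are bracketed, e.g. rdma:[fe80::1]:4444.
    if host.startswith("[") and host.endswith("]"):
        host = host[1:-1]
    port = int(port_str)
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    return host, port


print(parse_rdma_uri("rdma:192.168.1.10:4444"))  # ('192.168.1.10', 4444)
```

Splitting on the last colon keeps the host intact when it is itself an IPv6 address containing colons.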

Second, the normal live migration process kicks in for 'pc.ram'.

During the iterative phase of the migration, only RDMA WRITE messages are used. Messages are grouped into "chunks", which are pinned by the hardware in 64-page increments. Each chunk, rather than each individual page, is acknowledged in the Queue Pair's completion queue.
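The chunk arithmetic can be sketched as follows, assuming 4 KiB pages (the page size is an assumption; the 64-page chunk comes from the text). The helper names are hypothetical. The point is that dirty pages are mapped to the distinct chunks that must be pinned and written, and one completion is expected per chunk rather than per page.

```python
PAGE_SIZE = 4096                      # assumption: 4 KiB target pages
CHUNK_PAGES = 64                      # from the text: chunks of 64 pages
CHUNK_SIZE = PAGE_SIZE * CHUNK_PAGES  # 256 KiB per chunk under these assumptions


def chunk_index(offset: int) -> int:
    """Return the chunk containing a byte offset within a RAM block."""
    return offset // CHUNK_SIZE


def num_chunks(block_length: int) -> int:
    """Number of chunks covering a RAM block; the last one may be partial."""
    return -(-block_length // CHUNK_SIZE)  # ceiling division


def chunks_touched(dirty_pages: list[int]) -> list[int]:
    """Map dirty page numbers to the distinct chunks to pin and write.

    Completions are counted per chunk, so several dirty pages in the same
    chunk produce a single acknowledgment.
    """
    return sorted({page // CHUNK_PAGES for page in dirty_pages})
```

For example, dirty pages 0, 1 and 63 all fall in chunk 0, so they cost one pinned region and one completion.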

During iteration over RAM, no SEND messages are exchanged; only RDMA WRITEs are issued.

During the last iteration, once the device and CPU state is ready to be sent, we begin to use RDMA SEND messages.

Due to the asynchronous nature of RDMA, the receiver of the migration must post Receive work requests in the queue *before* a SEND work request can be posted.

To achieve this, both sides perform an initial 'barrier' synchronization. Before the barrier, each side is already known to have a receive work request posted. Each side then sends a message to the other and blocks on its completion queue until the peer's message arrives, which confirms that the peer is alive and ready to send the rest of the live migration state (qemu_send/recv_barrier()). From this point on, the two sides communicate through QEMUFile as normal.
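The ordering constraint can be illustrated with a toy, single-threaded model in which a SEND only completes if the peer has already posted a receive. `Peer` and `barrier` are hypothetical names; this sketch does not use libibverbs or librdmacm and only mirrors the post-receive-then-exchange order described above.

```python
class Peer:
    """Toy model of one side of the RDMA connection (hypothetical, not QEMU code)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.posted_recvs = 0             # receive work requests still unused
        self.completions: list[str] = []  # SENDs that landed in a posted receive

    def post_recv(self) -> None:
        """Post one receive work request."""
        self.posted_recvs += 1

    def send(self, peer: "Peer", msg: str) -> None:
        """Deliver a SEND; it can only complete into a receive the peer posted earlier."""
        if peer.posted_recvs == 0:
            raise RuntimeError(f"{peer.name} has no receive posted")
        peer.posted_recvs -= 1
        peer.completions.append(msg)


def barrier(src: Peer, dst: Peer) -> bool:
    """Post receives on both sides first, then exchange 'ready' messages."""
    src.post_recv()
    dst.post_recv()
    src.send(dst, "ready")
    dst.send(src, "ready")
    # A real peer would block on its completion queue here; in this
    # sequential model we just check that both 'ready' messages arrived.
    return "ready" in src.completions and "ready" in dst.completions
```

Swapping the order (sending before the peer posts its receive) makes `send` raise, which is the situation the barrier exists to prevent.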

The difference between TCP and SEND lies in migration-rdma.c: since we cannot simply dump the bytes into a socket, each SEND message must instead be preceded by one side telling the other side *exactly* how many bytes the SEND message will contain.
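One way to convey the exact byte count is a fixed-size header carrying a message type and payload length. The sketch below assumes such an in-band header; the 8-byte layout (two 32-bit big-endian fields) and `MSG_QEMU_FILE` are hypothetical and not the actual migration-rdma.c control format.

```python
import struct

# Hypothetical control header: message type and payload length, both
# 32-bit unsigned integers in network byte order.
HEADER = struct.Struct("!II")
MSG_QEMU_FILE = 1  # hypothetical type code for a piece of QEMUFile data


def frame(msg_type: int, payload: bytes) -> bytes:
    """Prefix the payload with a header announcing exactly how many bytes follow."""
    return HEADER.pack(msg_type, len(payload)) + payload


def unframe(message: bytes) -> tuple[int, bytes]:
    """Parse a framed message and check that the announced length matches."""
    if len(message) < HEADER.size:
        raise ValueError("message shorter than header")
    msg_type, length = HEADER.unpack_from(message)
    payload = message[HEADER.size:]
    if len(payload) != length:
        raise ValueError(f"announced {length} bytes, got {len(payload)}")
    return msg_type, payload
```

Because the receiver knows the length up front, it can verify that the whole message arrived instead of reading until a socket runs dry.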

Each time a SEND is received, the receiver buffers the message and hands out its bytes to the qemu_loadvm_state() function until all the bytes of the buffered SEND message have been consumed.
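The receiver-side buffering can be sketched as a small class that holds one SEND payload and returns its bytes piecemeal to a consumer standing in for qemu_loadvm_state(). `SendBuffer` and its methods are hypothetical names chosen for illustration.

```python
class SendBuffer:
    """Holds one received SEND and hands its bytes out on demand (hypothetical)."""

    def __init__(self) -> None:
        self._data = b""
        self._pos = 0

    def exhausted(self) -> bool:
        """True once every byte of the buffered SEND has been handed out."""
        return self._pos >= len(self._data)

    def fill(self, payload: bytes) -> None:
        """Buffer a new SEND; only allowed after the previous one is used up."""
        if not self.exhausted():
            raise RuntimeError("previous SEND not yet exhausted")
        self._data, self._pos = payload, 0

    def read(self, n: int) -> bytes:
        """Return up to n bytes; fewer if the buffered SEND runs out."""
        chunk = self._data[self._pos:self._pos + n]
        self._pos += len(chunk)
        return chunk
```

A consumer calls `read()` repeatedly; an empty result means the next SEND has to be received before loading can continue.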

Before the SEND is exhausted, the receiver sends an 'ack' SEND back to the sender to let the savevm_state_* functions know that they can resume and start generating more SEND messages.

This ping-pong of SEND messages happens until the live migration completes.
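The ping-pong flow control can be modeled with two threads and two queues, where each `queue.Queue` stands in for the SEND channel in one direction. This is a hypothetical sketch of the exchange pattern only: the sender waits for an ack after every SEND, and the receiver acks before it finishes consuming the buffered bytes.

```python
import queue
import threading


def run_ping_pong(state: bytes, max_send: int) -> tuple[bytes, int]:
    """Send `state` in SEND-sized pieces, waiting for an ack after each one.

    Returns the bytes the receiver reassembled and the number of acks the
    sender waited for. Queues stand in for RDMA SENDs (an assumption).
    """
    if max_send <= 0:
        raise ValueError("max_send must be positive")
    to_dest: queue.Queue = queue.Queue()
    to_src: queue.Queue = queue.Queue()
    received = bytearray()

    def receiver() -> None:
        while True:
            msg = to_dest.get()
            if msg is None:        # end of the migration stream
                return
            to_src.put("ack")      # ack before the buffered bytes are consumed
            received.extend(msg)   # stand-in for feeding qemu_loadvm_state()

    t = threading.Thread(target=receiver)
    t.start()
    acks = 0
    for off in range(0, len(state), max_send):
        to_dest.put(state[off:off + max_send])
        to_src.get()               # block until the receiver acks this SEND
        acks += 1
    to_dest.put(None)
    t.join()
    return bytes(received), acks
```

Since the sender never has more than one unacknowledged SEND in flight, the receiver only ever needs one posted receive buffer for migration state at a time.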