Features/RDMALiveMigration

Summary

Live migration using RDMA instead of TCP.

Contact

- Name: Michael Hines

- Email: mrhines@us.ibm.com

Description

Uses the standard OFED software stack, which supports both RoCE and InfiniBand.

Design

- To maximize cross-device compatibility, we use the librdmacm library, which abstracts the RDMA capabilities of each type of RDMA device, including InfiniBand, iWARP, and RoCE. This patch has been tested on both RoCE and InfiniBand devices from Mellanox.

- A new file named "migration-rdma.c" contains the core code required to perform librdmacm connection establishment and the transfer of actual RDMA contents.

- Files "arch_init.c" and "savevm.c" have been modified to transfer the VM's memory in the standard live migration path over RDMA instead of TCP.

- Currently, the XBZRLE capability and the detection of zero pages (dup_page()) significantly slow down the empirical throughput observed when RDMA is activated, so the code path skips both when RDMA is enabled. We hope to remove this restriction in the future and find a way to keep these capabilities while using RDMA.

- All of the original logic for device migration and protocol synchronization is unchanged: it still happens over TCP, as it normally does, while memory is transferred over RDMA.

Usage

Compiling:

$ ./configure --enable-rdma --target-list=x86_64-softmmu

$ make

Command line on the source AND destination machines:

$ x86_64-softmmu/qemu-system-x86_64 ....... -rdmaport 3456 -rdmahost x.x.x.x

- "rdmaport" can be any free port of your choosing

- "rdmahost" should be the destination IP address assigned to the remote interface with RDMA capabilities

- Both parameters should be identical on both machines

- The "rdmahost" option should match on the destination because both sides use the same IP address to discover which RDMA interface to use
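Since both machines must be started with identical values, one way to avoid drift is to build the option string once and reuse it on each side. The following is only a minimal sketch: the variable names `RDMA_HOST` and `RDMA_PORT`, the function `rdma_opts`, and the address 192.0.2.10 are hypothetical placeholders, not part of the patch.

```shell
#!/bin/sh
# Hypothetical helper: build the RDMA options once so that the source and
# destination QEMU instances are started with identical values.
RDMA_HOST="${RDMA_HOST:-192.0.2.10}"  # placeholder: address of the destination's RDMA-capable interface
RDMA_PORT="${RDMA_PORT:-3456}"        # any free port; must match on both machines

rdma_opts() {
    # Refuse an empty or non-numeric port before it reaches the command line.
    case "$RDMA_PORT" in
        ''|*[!0-9]*) echo "invalid RDMA port: $RDMA_PORT" >&2; return 1 ;;
    esac
    printf '%s\n' "-rdmaport $RDMA_PORT -rdmahost $RDMA_HOST"
}

# Print the options; append them to the qemu-system-x86_64 command line
# on both the source and the destination.
rdma_opts
```

The printed string is meant to be appended to whatever other options each QEMU instance already uses.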

Optionally, you can enable RDMA later through the QEMU monitor or libvirt. Libvirt is the more flexible route, and looks like this:

$ virsh qemu-monitor-command --hmp --cmd "migrate_set_rdma_host xx.xx.xx.xx"

$ virsh qemu-monitor-command --hmp --cmd "migrate_set_rdma_port 3456"

Then, verify RDMA is activated before starting migration:

$ virsh qemu-monitor-command --hmp --cmd "info migrate_capabilities"

capabilities: xbzrle: off rdma: on

Also, make sure to increase the maximum throughput allowed:

$ virsh qemu-monitor-command --hmp --cmd "migrate_set_speed 40g" # or whatever is the MAX of your RDMA device

Finally, perform the actual migration:

$ virsh migrate domain tcp:xx.xx.xx.xx:TCPPORT # do NOT use the RDMA port, use the TCP port

- Note the difference in syntax here: We're using the TCP port, not the RDMA port.

- All the control and device migration logic still happens over TCP, but the memory pages and RDMA connection setup go over the RDMA port.

If you're doing this on the command-line, you will then want to resume the VM:

$ virsh qemu-monitor-command --hmp --cmd "continue"
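The steps above can be collected into a single dry-run script that prints the commands in order, so the sequence can be reviewed before anything is executed. This is a sketch under stated assumptions: all variable values are placeholders, the function names `hmp` and `migration_plan` are hypothetical, and `TCP_HOST` is kept separate from `RDMA_HOST` only because this page does not say whether the two addresses are the same.

```shell
#!/bin/sh
# Dry-run sketch: prints the monitor and migration commands from this
# section in order. Nothing is executed. All values are placeholders.
RDMA_HOST="192.0.2.10"   # destination's RDMA-capable interface address
RDMA_PORT="3456"         # RDMA port (memory pages and RDMA connection setup)
TCP_HOST="192.0.2.20"    # address used for the TCP control channel (assumption)
TCP_PORT="4444"          # TCP port (control and device state)
DOMAIN="domain"          # libvirt domain name, as in the example above
MAX_SPEED="40g"          # or whatever is the maximum of your RDMA device

hmp() {
    # Print a monitor command in the same form used in this section.
    printf '%s\n' "virsh qemu-monitor-command --hmp --cmd \"$1\""
}

migration_plan() {
    hmp "migrate_set_rdma_host $RDMA_HOST"
    hmp "migrate_set_rdma_port $RDMA_PORT"
    hmp "info migrate_capabilities"      # expect "rdma: on" in the output
    hmp "migrate_set_speed $MAX_SPEED"
    # The migration URI uses the TCP port, never the RDMA port.
    printf '%s\n' "virsh migrate $DOMAIN tcp:$TCP_HOST:$TCP_PORT"
    hmp "continue"                       # only needed when resuming from the command line
}

migration_plan
```

Keeping the two ports in separate variables makes it harder to pass the RDMA port in the migration URI by mistake.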

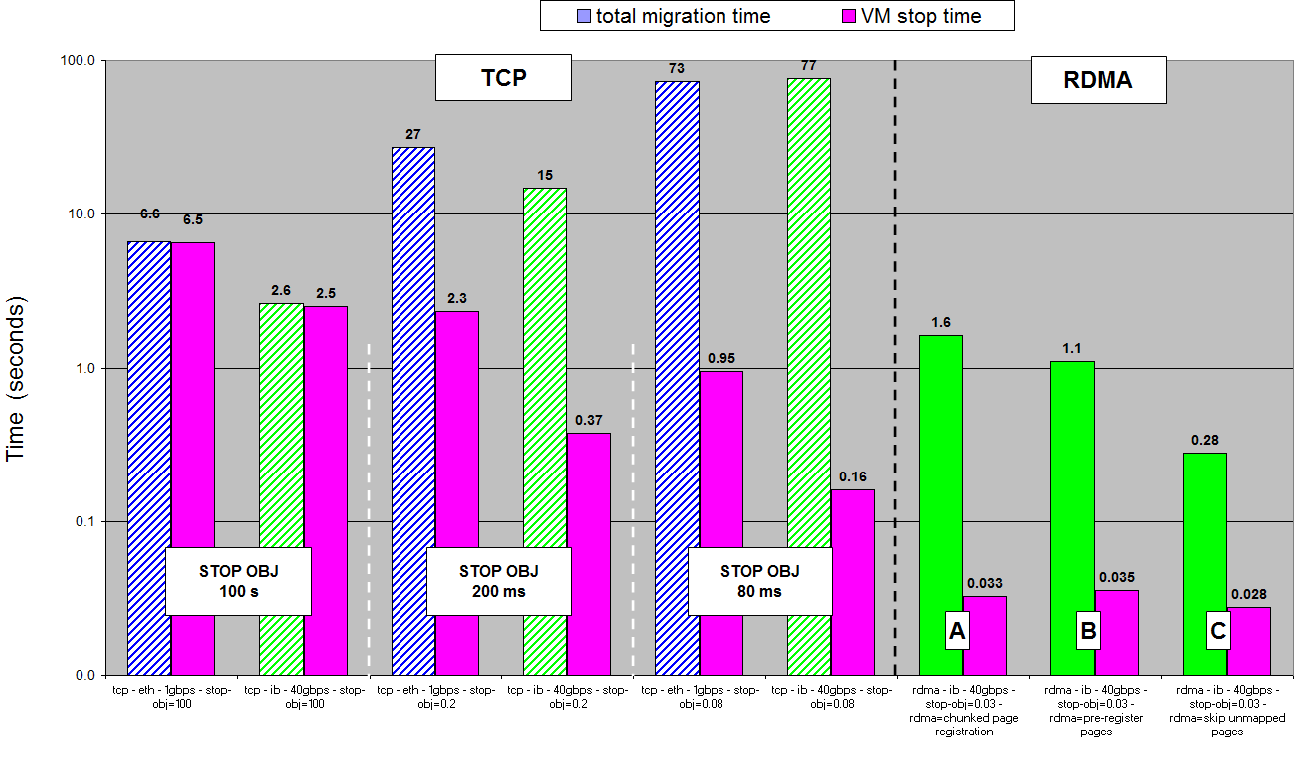

Performance

TODO

- Figure out how to properly cap RDMA throughput without copying data through a new type of QEMUFile abstraction and without artificially slowing down RDMA throughput because of control logic.

- Integrate with XOR-based run-length encoding (if possible)

- Stop skipping the zero-pages check