Features/FVD/Compare

Latest revision as of 14:45, 11 October 2016

QED is another new QEMU image format. Below is the FVD developer’s attempt to summarize the advantages of FVD over QED. The other side of the story, i.e., the advantages of QED over FVD, might be presented separately elsewhere.

Design and Implementation Comparison

By design, FVD has the following advantages over QED:

- Like other existing image formats, QED does storage allocation twice, first by the image format and then by a host file system. This is a fundamental problem that FVD was designed to address.

- Most importantly, regardless of the underlying platform, QED insists on getting in the way and doing storage allocation in its naïve, one-size-fits-all manner. Given the diversity of platforms QEMU supports, this is unlikely to perform well in many cases. Storage systems have different characteristics (solid-state drive/Flash, DAS, NAS, SAN, etc.), and host file systems (GFS, NTFS, FFS, LFS, ext2/ext3/ext4, ReiserFS, Reiser4, XFS, JFS, VMFS, ZFS, etc.) provide many different features and are optimized for different objectives (flash wear leveling, seek distance, reliability, etc.). An image format should piggyback on the diverse solutions that the storage and file systems community has developed through 40 years of hard work, rather than insisting on reinventing a naïve, one-size-fits-all wheel to redo storage allocation.

- Interference between an image format and a host file system may keep either of them from working well, even if each is optimal by itself. For example, what would happen if the image format and the host file system both performed online defragmentation simultaneously? If the image format’s storage allocation algorithm really works well, the image should be stored on a logical volume and the host file system should be disabled. If the host file system’s algorithm works well, the image format’s algorithm should be disabled. It is better not to run both at the same time, where they can confuse each other.

- Obviously, doing storage allocation twice doubles the overhead: on-disk metadata is updated twice, fragmentation is introduced twice, metadata is cached twice, and so on.

- By contrast, FVD’s simple design makes all of the following configurations possible: 1) perform storage allocation only in a host file system; 2) perform storage allocation only in FVD (directly on a logical volume without a host file system); 3) perform storage allocation twice, as existing image formats do; or 4) have FVD perform copy-on-write, copy-on-read, and adaptive prefetching, but delegate storage allocation to another QEMU image format (assuming that format does it better). This flexibility allows FVD to support any use case, even if some unanticipated, bizarre storage technology becomes mainstream in the future (flash, nano, or whatever).

- QED relies on a defragmentation algorithm to solve the fragmentation problem it introduces. Image-level defragmentation is an uncharted area with no prior research or open-source implementation, and with many open questions. For example, how does QED’s defragmentation interact with a host file system’s defragmentation? How contiguous will a partially full image be? Under a dynamic workload, how long will defragmentation take to settle down, and what is its quantified overhead or benefit? How can one predict when the overhead is not worth the benefit and skip defragmentation? Most importantly, why first artificially introduce fragmentation at the image layer and then try hard to defragment it? If users were empowered with choices, say, QEMU providing a fun option called “--fabricate-fragmentation-then-try-unproven-defrag”, how many users would want to enjoy this roller coaster ride and turn the option on? QED mandates that this option be on, whereas FVD allows turning it off: when FVD’s table is disabled, FVD uses a RAW-image-like data layout.

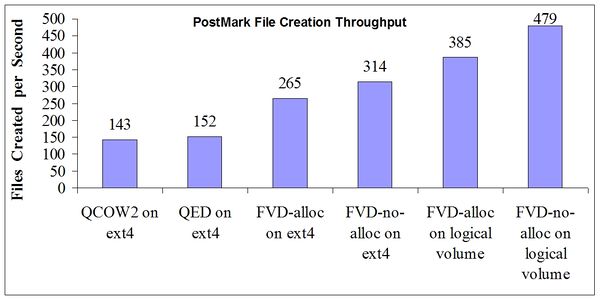

- A QED image relies on a host file system and cannot be stored directly on a logical volume. Using a logical volume is a valid use case and is supported by libvirt. It is better to empower users with choices than to restrict what they can do. With a proper design, supporting logical volumes is actually quite simple, as FVD shows. The results below show that using a logical volume improves PostMark’s file creation throughput by 45-53%.

- QED needs more memory to cache on-disk metadata than FVD does, and introduces more disk I/Os to read on-disk metadata. For a 1TB image, QED’s metadata is 128MB, vs. FVD’s 6MB metadata (2MB for the bitmap and 4MB for the lookup table). If the lookup table is disabled, which is a preferred high-performance configuration, then it is only 2MB for FVD.

- QED introduces more disk I/O overhead than FVD does when updating on-disk metadata, for several reasons. First, FVD’s journal converts multiple concurrent updates into sequential writes in the journal, which the host Linux kernel can merge into a single write. Second, FVD’s table can be (and preferably is) disabled, in which case it incurs no update overhead. Even if the table is enabled, FVD’s chunk is much larger than QED’s cluster and hence needs fewer updates. Finally, although QED and FVD use the same block/cluster size, FVD can be optimized to eliminate most bitmap updates with several techniques: A) use resize2fs to reduce the base image to its minimum size (as a cloud can do), so that most writes occur at locations beyond the size of the base image and need no bitmap update; B) let ‘qemu-img create’ find zero-filled sectors in the base image and preset the corresponding bits of the bitmap (see need_zero_init in the Features/FVD/Specification); and C) copy-on-read and prefetching do not update the on-disk bitmap, and once prefetching finishes, FVD no longer needs to read or write the bitmap at all. See the paper for details of these bitmap optimizations. In summary, when an FVD image is fully optimized (e.g., the table is disabled and the base image is reduced to its minimum size), FVD has almost zero metadata update overhead and its data layout is just like that of a RAW image.

- FVD supports internal snapshots, while QED does not. Through a smart design, FVD gets all the benefits of QCOW2’s internal snapshot function without incurring any of its overhead.

- FVD has a mature and highly optimized implementation for on-demand image streaming, including adaptive prefetching and copy-on-read with minimal overhead.

- FVD is the only fully asynchronous, non-blocking block device driver that exists for QEMU today. The drivers of other image formats perform metadata reads and writes synchronously, which blocks the VM. This is one reason for FVD’s superior performance over other image formats.

- FVD parallelizes I/Os to the maximum degree possible. For example, if processing a VM-generated read request requires reading data from the base image as well as from several non-contiguous chunks in the FVD image, FVD issues all of these I/O requests in parallel rather than sequentially.
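The 1TB metadata figures in the list above can be reproduced with simple arithmetic. The sketch below is a back-of-the-envelope calculation and does not come from either driver. Its parameters are assumptions chosen to be consistent with the page. For QED it assumes 64KB clusters with one 8-byte table entry per cluster, counting only second-level tables. For FVD it assumes one bitmap bit per 64KB block and one 4-byte lookup-table entry per 1MB chunk. The page’s MB figures correspond to binary units (MiB).

```python
# Back-of-the-envelope metadata sizes for a 1 TiB image.
# All format parameters are assumptions chosen to match the page's figures.
KiB = 1024
MiB = 1024 * KiB
TiB = 1024 * 1024 * MiB

IMAGE_SIZE = 1 * TiB

# Assumed QED layout: 64 KiB clusters, one 8-byte table entry per cluster
# (second-level tables only; the first-level table is negligible).
QED_CLUSTER = 64 * KiB
QED_ENTRY_BYTES = 8

# Assumed FVD layout: one bitmap bit per 64 KiB block (same as QED's cluster)
# and one 4-byte lookup-table entry per 1 MiB chunk.
FVD_BLOCK = 64 * KiB
FVD_CHUNK = 1 * MiB
FVD_ENTRY_BYTES = 4


def qed_metadata_bytes(size: int) -> int:
    """Bytes of QED table entries needed to map `size` bytes."""
    return (size // QED_CLUSTER) * QED_ENTRY_BYTES


def fvd_bitmap_bytes(size: int) -> int:
    """Bytes of FVD bitmap: one bit per block, 8 bits per byte."""
    return (size // FVD_BLOCK) // 8


def fvd_table_bytes(size: int) -> int:
    """Bytes of FVD lookup table: one entry per chunk."""
    return (size // FVD_CHUNK) * FVD_ENTRY_BYTES


if __name__ == "__main__":
    qed = qed_metadata_bytes(IMAGE_SIZE)
    bitmap = fvd_bitmap_bytes(IMAGE_SIZE)
    table = fvd_table_bytes(IMAGE_SIZE)
    print(f"QED: {qed // MiB} MiB")                              # QED: 128 MiB
    print(f"FVD bitmap + table: {(bitmap + table) // MiB} MiB")  # FVD bitmap + table: 6 MiB
    print(f"FVD bitmap only: {bitmap // MiB} MiB")               # FVD bitmap only: 2 MiB
```

The ratio follows from granularity. QED keeps a full 8-byte entry for every 64KB cluster. Under these assumptions, FVD keeps a single bit per block plus a much coarser per-chunk table.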
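Bitmap optimizations A and B above amount to a cheap check before each write. The following is a minimal sketch of that decision under simplifying assumptions. An in-memory list of booleans stands in for the bitmap, where True means the block is already stored in the FVD image. The function name `needs_bitmap_update` is hypothetical and is not part of FVD’s code.

```python
from typing import Sequence


def needs_bitmap_update(
    offset: int,
    length: int,
    base_size: int,
    bitmap: Sequence[bool],
    block_size: int,
) -> bool:
    """Return True if writing `length` bytes at `offset` must update the on-disk bitmap.

    bitmap[i] is True when block i is already stored in the FVD image.
    """
    if length <= 0:
        return False
    # Optimization A: locations at or beyond the base image's size never hold
    # base-image data, so writes there need no bitmap bit at all.
    if offset >= base_size:
        return False
    # Only the part of the write that overlaps the base image can matter.
    end = min(offset + length, base_size)
    first = offset // block_size
    last = (end - 1) // block_size
    # Optimization B: bits preset at creation time (e.g. for zero-filled base
    # sectors) or set by earlier writes need no further update.
    return any(not bitmap[b] for b in range(first, last + 1))


if __name__ == "__main__":
    BLOCK = 64 * 1024
    base_size = 4 * BLOCK                # base image shrunk to 4 blocks
    bitmap = [True, False, True, True]   # blocks 0, 2, 3 already marked
    print(needs_bitmap_update(5 * BLOCK, 4096, base_size, bitmap, BLOCK))  # False
    print(needs_bitmap_update(1 * BLOCK, 4096, base_size, bitmap, BLOCK))  # True
```

Under these assumptions, shrinking the base image (A) pushes most writes into the `offset >= base_size` branch. Presetting bits (B) makes the final check return False for more blocks inside the base image.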
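A toy model illustrates why the journal helps. When each metadata update is appended to the next free slot of a journal, concurrent updates land at adjacent offsets, and adjacent writes can be merged into one. In-place updates scattered across a table cannot be merged this way. The `Journal` class and `coalesce` function below are hypothetical. `coalesce` is a simplified stand-in for the request merging done by the host kernel, not its actual algorithm, and the fixed record size is an assumption rather than FVD’s on-disk journal format.

```python
from typing import List, Tuple

Write = Tuple[int, bytes]  # (disk offset, payload)


class Journal:
    """Toy append-only metadata journal (hypothetical, not FVD's on-disk format)."""

    def __init__(self, start: int, record_size: int) -> None:
        self.next_offset = start
        self.record_size = record_size

    def append(self, record: bytes) -> Write:
        if len(record) > self.record_size:
            raise ValueError("record larger than journal slot")
        # Pad to a fixed size so consecutive records are adjacent on disk.
        padded = record.ljust(self.record_size, b"\0")
        write = (self.next_offset, padded)
        self.next_offset += self.record_size
        return write


def coalesce(writes: List[Write]) -> List[Write]:
    """Merge writes whose byte ranges are exactly adjacent."""
    merged: List[Write] = []
    for offset, data in sorted(writes, key=lambda w: w[0]):
        if merged and merged[-1][0] + len(merged[-1][1]) == offset:
            prev_offset, prev_data = merged[-1]
            merged[-1] = (prev_offset, prev_data + data)
        else:
            merged.append((offset, data))
    return merged


if __name__ == "__main__":
    journal = Journal(start=4096, record_size=512)
    pending = [journal.append(f"update {i}".encode()) for i in range(8)]
    print(len(coalesce(pending)))    # 1: eight journal records become one write
    scattered = [(0, b"x" * 8), (65536, b"y" * 8), (131072, b"z" * 8)]
    print(len(coalesce(scattered)))  # 3: in-place table updates stay separate
```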
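The non-blocking, parallel read path described in the last two items can be sketched with Python’s asyncio. This illustrates the idea only. `read_base`, `read_fvd`, and `handle_guest_read` are hypothetical names, the reads are simulated with `asyncio.sleep`, and FVD itself is a C driver inside QEMU rather than asyncio code. A guest read is modeled as a list of pieces, each served either by the base image or by an FVD chunk.

```python
import asyncio
from typing import List, Tuple

# One piece of a guest read: (source, offset, length); source is "base" or "fvd".
Piece = Tuple[str, int, int]


async def read_base(offset: int, length: int) -> bytes:
    await asyncio.sleep(0.01)  # stand-in for base-image read latency
    return b"B" * length


async def read_fvd(offset: int, length: int) -> bytes:
    await asyncio.sleep(0.01)  # stand-in for FVD-image read latency
    return b"F" * length


async def handle_guest_read(pieces: List[Piece]) -> bytes:
    """Issue every sub-read at once and assemble the results in request order."""
    coros = [
        read_base(off, n) if src == "base" else read_fvd(off, n)
        for src, off, n in pieces
    ]
    # gather() runs the sub-reads concurrently without blocking the event loop
    # and returns results in input order, so latency is roughly that of the
    # slowest piece rather than the sum of all pieces.
    parts = await asyncio.gather(*coros)
    return b"".join(parts)


if __name__ == "__main__":
    request = [("base", 0, 4), ("fvd", 1 << 20, 2), ("base", 8, 3)]
    print(asyncio.run(handle_guest_read(request)))  # b'BBBBFFBBB'
```

Issuing the sub-reads one after another would add their latencies. Concurrent submission is what lets a request that spans the base image and several non-contiguous chunks complete in about one I/O round-trip.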

Performance Comparison

The performance results below are preliminary, as both FVD and QED are moving targets. This figure shows that the file creation throughput of NetApp’s PostMark benchmark under FVD is 74.9% to 215% higher than that under QED. See the detailed experiment setup.

In this figure, “on ext4” means the image is stored on a host ext4 file system, whereas “on logical volume” means the image is stored on a logical volume directly without a host file system. “FVD-alloc” means FVD enables its lookup table and does storage allocation, whereas “FVD-no-alloc” means FVD disables its lookup table and performs no storage allocation by itself.